Embark on a journey to master the fundamentals of Kubernetes with our comprehensive guide.

Kubernetes Basics and Architecture

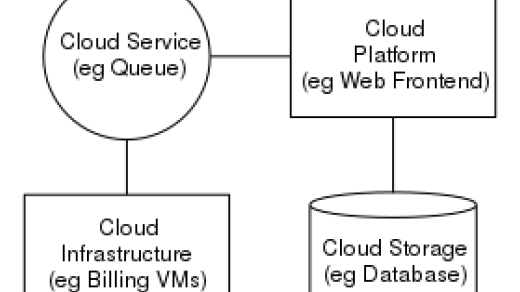

Kubernetes is a powerful open-source platform that automates the deployment, scaling, and management of containerized applications. Understanding its basics and architecture is crucial for anyone looking to work with Kubernetes effectively.

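Kubernetes follows a client-server architecture in which the control plane (historically called the master) manages the cluster through components such as the API server, scheduler, controller manager, and etcd. The worker nodes run your applications and workloads, each under the supervision of a kubelet agent.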

Key building blocks of Kubernetes include pods, the smallest deployable units, each of which runs one or more containers, and services, which give a set of pods a stable network endpoint so that different parts of an application can communicate.
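To make the relationship between pods and services concrete, here is a minimal sketch in Python that builds one Pod and one Service as plain dictionaries and prints them as JSON, which kubectl accepts alongside YAML. The name `web`, the label `app: web`, the `nginx:stable` image, and the helper `selects` are illustrative assumptions, not part of any particular setup.

```python
import json

# Hypothetical example objects: the names, labels, and image are assumptions.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {"name": "web", "image": "nginx:stable", "ports": [{"containerPort": 80}]}
        ]
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        # The service routes traffic to every pod whose labels match this selector.
        "selector": {"app": "web"},
        "ports": [{"port": 80, "targetPort": 80}],
    },
}


def selects(service, pod):
    """Return True if every selector label on the service is present on the pod."""
    labels = pod["metadata"].get("labels", {})
    selector = service["spec"]["selector"]
    return all(labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    # Wrap both objects in a List so they can be applied in one step.
    bundle = {"apiVersion": "v1", "kind": "List", "items": [pod, service]}
    print(json.dumps(bundle, indent=2))
```

If the script is saved under a name of your choosing, its output can be piped into `kubectl apply -f -`. The service finds the pod only through the label selector, which is why `selects(service, pod)` is true here.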

By learning Kubernetes from scratch, you will gain the skills needed to deploy and manage your applications efficiently in a cloud-native environment. This knowledge is essential for anyone looking to work with modern software development practices like DevOps.

Take the first step towards mastering Kubernetes by diving into its basics and architecture. With the right training and hands-on experience, you can become proficient in leveraging Kubernetes for your projects.

Cluster Setup and Configuration

When setting up and configuring a Kubernetes cluster, it is essential to understand the key components involved. Begin by installing the necessary software: a container runtime, the Kubernetes components themselves, and the kubectl command-line tool. Depending on the installer you use, YAML configuration files define the desired state of your cluster, specifying details such as the number of nodes, networking configuration, and storage options.

Ensure that your cluster is configured for high availability, with redundancy built in to prevent downtime. Implement service discovery so that different parts of your application can find each other, and use authentication and Transport Layer Security (TLS) to keep the environment secure. Familiarize yourself with kubectl, the Kubernetes command-line interface, to manage and monitor your cluster effectively.

Take advantage of resources such as tutorials, documentation, and online communities to deepen your understanding of Kubernetes and troubleshoot any issues that may arise. Practice setting up and configuring clusters in different environments, such as on-premises servers or cloud platforms like Amazon Web Services or Microsoft Azure. By gaining hands-on experience with cluster setup and configuration, you will build confidence in your ability to work with Kubernetes in a production environment.

Understanding Kubernetes Objects and Resources

Kubernetes objects are persistent entities, such as pods, services, and deployments, that record the desired state of your cluster. Resources, on the other hand, are the computing capacity within a Kubernetes cluster that is allocated to your objects, including CPU, memory, storage, and networking. By understanding how to define and manage these resources, you can ensure that your applications run smoothly and efficiently.
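As a rough illustration of how CPU and memory are declared, the sketch below shows a container spec with `requests` and `limits`, plus two small helpers that convert Kubernetes quantity strings into numbers. The container name, image, and values are assumptions chosen for the example, and the parsers handle only the `m`, `Ki`, `Mi`, and `Gi` suffixes, not the full quantity syntax.

```python
# Hypothetical container spec: the name, image, and values are assumptions.
container = {
    "name": "api",
    "image": "nginx:stable",
    "resources": {
        # Requests are what the scheduler reserves; limits cap actual usage.
        "requests": {"cpu": "250m", "memory": "128Mi"},
        "limits": {"cpu": "500m", "memory": "256Mi"},
    },
}

_MEMORY_FACTORS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_cpu(quantity):
    """Convert a CPU quantity such as "250m" or "2" to a number of cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity):
    """Convert a memory quantity such as "128Mi" to bytes (binary suffixes only)."""
    for suffix, factor in _MEMORY_FACTORS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def requests_within_limits(container):
    """Check that each request does not exceed the matching limit."""
    requests = container["resources"]["requests"]
    limits = container["resources"]["limits"]
    return (
        parse_cpu(requests["cpu"]) <= parse_cpu(limits["cpu"])
        and parse_memory(requests["memory"]) <= parse_memory(limits["memory"])
    )
```

A check like `requests_within_limits(container)` is the kind of validation you might run before applying a manifest; the API server rejects a request that is larger than its limit.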

When working with Kubernetes objects and resources, it is important to be familiar with the Kubernetes command-line interface (CLI) as well as the YAML syntax for defining objects. Additionally, understanding how to troubleshoot and debug issues within your Kubernetes cluster can help you maintain high availability for your applications.

By mastering the concepts of Kubernetes objects and resources, you can confidently navigate the world of container orchestration and DevOps. Whether you are a seasoned engineer or a beginner looking to expand your knowledge, learning Kubernetes from scratch will provide you with the skills needed to succeed in today’s cloud computing landscape.

Pod Concepts and Features

Each **pod** in Kubernetes has its own unique IP address, which allows it to communicate with other pods in the cluster. Pods can also be replicated and scaled up or down to meet application demands, usually through a controller such as a Deployment. **Pods** are designed to be ephemeral, meaning they can be created, destroyed, and replaced as needed.

Features of pods include **namespace isolation**: each pod runs in its own set of Linux namespaces, such as its own network namespace, so multiple pods can run on the same node without interfering with each other. **Resource isolation** is achieved through the CPU and memory requests and limits set on each container in the pod. **Pod** lifecycle management, including creation, deletion, and updates, is also a key feature.
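Replication and scaling are normally handled by a controller rather than by creating pods one at a time. The following sketch, under the assumption of a Deployment named `web` with three replicas, shows the manifest as a Python dictionary and a hypothetical helper, `with_replicas`, that produces a scaled copy.

```python
import copy
import json

# Hypothetical Deployment: the name, labels, and image are assumptions.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        # The selector must match the labels in the pod template below.
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "nginx:stable"}]},
        },
    },
}


def with_replicas(manifest, count):
    """Return a copy of the manifest with a new replica count."""
    if count < 0:
        raise ValueError("replica count cannot be negative")
    scaled = copy.deepcopy(manifest)
    scaled["spec"]["replicas"] = count
    return scaled


if __name__ == "__main__":
    # Print a five-replica version of the manifest as JSON for kubectl.
    print(json.dumps(with_replicas(deployment, 5), indent=2))
```

Because pods are ephemeral, the Deployment replaces any pod that disappears so that the running count returns to `replicas`. Changing that one field and reapplying the manifest is the declarative equivalent of `kubectl scale`.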

Understanding pod concepts and features is crucial for effectively deploying and managing applications in a Kubernetes environment. By mastering these fundamentals, you will be well-equipped to navigate the world of container orchestration and take your Kubernetes skills to the next level.

Implementing Network Policy in Kubernetes

To implement network policy in Kubernetes, start by understanding the concept of network policies, which allow you to control the flow of traffic between pods in your cluster.

By defining network policies, you can specify which pods are allowed to communicate with each other based on pod labels, namespaces, or IP address ranges.

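To create a network policy, you select the pods it applies to and list the traffic you want to allow, such as traffic from pods with a specific label or from pods in a certain namespace. Network policies are allow-lists: once a policy selects a pod, any traffic in that direction that no rule permits is denied.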

You can then apply these policies to your cluster by writing YAML files that describe them and running kubectl apply. Policies are only enforced if the cluster's network plugin supports them.
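As a sketch of what such a policy can look like, the example below allows ingress to pods labeled `app: db` only from pods labeled `app: web` on TCP port 5432. The labels, port, policy name, and the helper `ingress_allowed` are assumptions for illustration. The helper is a simplified model of same-namespace matching that ignores `namespaceSelector`, `ipBlock`, and `matchExpressions`; it is not how the cluster itself evaluates policies.

```python
import json

# Hypothetical policy: the names, labels, and port are assumptions.
policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "db-allow-web", "namespace": "default"},
    "spec": {
        # Pods this policy applies to.
        "podSelector": {"matchLabels": {"app": "db"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"app": "web"}}}],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
    },
}


def _labels_match(selector, labels):
    """True if every matchLabels entry in the selector is present on the pod."""
    wanted = selector.get("matchLabels", {})
    return all(labels.get(key) == value for key, value in wanted.items())


def ingress_allowed(policy, source_labels, port):
    """Simplified check: does any ingress rule admit this source pod and port?"""
    for rule in policy["spec"].get("ingress", []):
        ports = rule.get("ports")
        peers = rule.get("from")
        # A rule without ports admits all ports; one without peers admits all sources.
        port_ok = not ports or any(p.get("port") == port for p in ports)
        peer_ok = not peers or any(
            _labels_match(peer["podSelector"], source_labels)
            for peer in peers
            if "podSelector" in peer and "namespaceSelector" not in peer
        )
        if port_ok and peer_ok:
            return True
    return False


if __name__ == "__main__":
    print(json.dumps(policy, indent=2))
```

With this model, a pod labeled `app: web` is admitted on port 5432, while a pod with any other label, or the same pod on a different port, is not. Trying the same connections from real pods is a reasonable way to confirm the behavior in a cluster.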

Once your network policies are in place, you can test them by trying to communicate between pods that should be allowed or blocked according to your rules.

By mastering network policies in Kubernetes, you can ensure that your applications are secure and that traffic flows smoothly within your cluster.

Learning how to implement network policies is a valuable skill for anyone working with Kubernetes, as it allows you to control the behavior of your applications and improve the overall security of your system.

Practice creating and applying network policies in your own Kubernetes cluster to build your confidence and deepen your understanding of how networking works in a cloud-native environment.

Securing a Kubernetes Cluster

Using network policies can help you define how pods can communicate with each other, adding an extra layer of security within your cluster. Implementing Transport Layer Security (TLS) encryption for communication between components can further enhance the security of your Kubernetes cluster. Regularly audit and monitor your cluster for any suspicious activity or unauthorized access.

Consider using a proxy server or service mesh to protect your cluster from distributed denial-of-service (DDoS) attacks and other malicious traffic. Implementing strong authentication mechanisms, such as multi-factor authentication, can help prevent unauthorized access to your cluster. Regularly back up your data and configurations to prevent data loss in case of any unexpected downtime or issues.

Best Practices for Kubernetes Production

When running Kubernetes in production, several best practices help ensure a smooth and efficient deployment. One of the most important is **security**: secure your clusters and applications to protect against potential threats.

Another key practice is **monitoring and logging**. By setting up monitoring tools and logging mechanisms, you can keep track of your Kubernetes environment and quickly identify issues as they arise. This helps with debugging and troubleshooting, allowing you to address problems before they impact your production environment.

**Scaling** is also an important consideration in production. Set up autoscaling so that the number of pod replicas, and therefore the resources allocated to your applications, adjusts automatically with demand. This helps optimize performance and cost-efficiency.
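To illustrate autoscaling, the sketch below defines a HorizontalPodAutoscaler for a hypothetical Deployment named `web` and reproduces the core scaling rule from the Kubernetes documentation: desired replicas equal the current replicas multiplied by the ratio of the observed metric to the target, rounded up. The target name, the bounds, and the 70 percent CPU target are assumptions, and the helper leaves out the tolerance band and stabilization behavior of the real controller.

```python
import math

# Hypothetical autoscaler: the target name, bounds, and threshold are assumptions.
hpa = {
    "apiVersion": "autoscaling/v2",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": "web"},
    "spec": {
        "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": "web"},
        "minReplicas": 2,
        "maxReplicas": 10,
        "metrics": [
            {
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": 70},
                },
            }
        ],
    },
}


def desired_replicas(current, observed, target, minimum, maximum):
    """Core HPA rule: ceil(current * observed / target), clamped to the bounds."""
    wanted = math.ceil(current * observed / target)
    return max(minimum, min(maximum, wanted))
```

For example, four replicas running at 90 percent against a 60 percent target would scale to six, while a large spike is capped at `maxReplicas`.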

In addition, it’s crucial to regularly **back up** your data and configurations. This helps prevent data loss and ensures that you can recover quickly in the event of a failure. Finally, consider implementing service discovery to simplify communication between services in your Kubernetes environment.

Capacity Planning and Configuration Management

Capacity planning and **configuration management** are crucial components in effectively managing a Kubernetes environment. Capacity planning involves assessing the resources required to meet the demands of your applications, ensuring optimal performance and scalability. **Configuration management** focuses on maintaining consistency and integrity in the configuration of your Kubernetes clusters, ensuring smooth operations.

To effectively handle capacity planning, it is essential to understand the resource requirements of your applications and predict future needs accurately. This involves monitoring resource usage, analyzing trends, and making informed decisions to scale resources accordingly. **Configuration management** involves defining and enforcing configuration policies, managing changes, and ensuring that all components are properly configured to work together seamlessly.
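A first-pass capacity estimate can be done with simple arithmetic. The sketch below is one possible approach under stated assumptions: every pod has the same CPU and memory request, every node has the same allocatable capacity, and a fixed fraction of each node is kept as headroom. The function name and the 20 percent default are hypothetical, and the calculation ignores scheduling fragmentation and system pods.

```python
import math


def nodes_needed(replicas, cpu_per_pod, mem_per_pod_gib,
                 node_cpu, node_mem_gib, headroom=0.2):
    """Estimate the node count from total requests and usable node capacity."""
    # Keep a share of every node free for spikes and system overhead.
    usable_cpu = node_cpu * (1 - headroom)
    usable_mem = node_mem_gib * (1 - headroom)
    by_cpu = math.ceil(replicas * cpu_per_pod / usable_cpu)
    by_mem = math.ceil(replicas * mem_per_pod_gib / usable_mem)
    # The scarcer resource decides how many nodes are required.
    return max(by_cpu, by_mem)
```

For instance, `nodes_needed(12, 0.5, 1, 4, 16)` considers 12 pods that each request half a core and 1 GiB on nodes with 4 cores and 16 GiB. CPU is the constraint in that case, while a memory-heavy workload such as `nodes_needed(40, 0.25, 2, 4, 16)` is bounded by memory instead.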

With proper capacity planning and **configuration management**, you can optimize resource utilization, prevent bottlenecks, and ensure high availability of your applications. By implementing best practices in these areas, you can streamline operations, reduce downtime, and enhance the overall performance of your Kubernetes clusters.

Real-World Case Studies and Failures in Kubernetes

| Company | Description | Solution |
|---|---|---|
| Netflix | Netflix faced issues with pod scalability and resource management in their Kubernetes cluster. | They implemented Horizontal Pod Autoscaling and resource quotas to address these issues. |
| Spotify | Spotify experienced downtime due to misconfigurations in their Kubernetes deployment. | They introduced automated testing and CI/CD processes to catch configuration errors before deployment. |
| Twitter | Twitter encountered network bottlenecks and performance issues in their Kubernetes cluster. | They optimized network configurations and implemented network policies to improve performance. |
| Amazon | Amazon faced security vulnerabilities and data breaches in their Kubernetes infrastructure. | They enhanced security measures, implemented network policies, and regularly audited their cluster for vulnerabilities. |