Welcome to our simplified Kubernetes Architecture Tutorial, where we break down the complexities of Kubernetes into easy-to-understand concepts.

Introduction to Kubernetes Architecture

Kubernetes follows a client-server model: clients such as `kubectl` send requests to the control plane, which manages the cluster's workloads and resources. A cluster consists of a control plane and one or more nodes that run the actual applications.

The control plane is responsible for managing the cluster, scheduling applications, scaling workloads, and monitoring the overall health of the cluster. It consists of components like the API server, scheduler, and controller manager.

Nodes are the machines where the applications run. Each node runs the Kubernetes agent, called the kubelet, which communicates with the control plane. Each node also has a container runtime, such as containerd or Docker Engine, to run the application containers.

Understanding the basic architecture of Kubernetes is crucial for anyone looking to work with containerized applications in a cloud-native environment. By grasping these concepts, you’ll be better equipped to manage and scale your applications effectively.

Cluster Components

| Component | Description |
|---|---|
| Kubelet | Node agent that communicates with the control plane and manages the containers on its node. |
| Kube Proxy | Handles network routing for services in the cluster. It maintains network rules on nodes. |
| API Server | Acts as the front-end for Kubernetes. It handles requests from clients and communicates with other components. |
| Controller Manager | Monitors the state of the cluster and makes changes to bring the current state closer to the desired state. |
| Etcd | Distributed key-value store that stores cluster data such as configurations, state, and metadata. |
| Scheduler | Assigns workloads to nodes based on resource requirements and other constraints. |

Master Machine Components

Kubernetes architecture revolves around *nodes* and *pods*. Nodes are individual machines in a cluster, while pods are groups of containers running on those nodes. Pods can contain multiple containers that work together to form an application.

*Master components*, also called control plane components, manage the overall cluster and make global decisions such as scheduling and scaling. They include the *kube-apiserver*, *kube-controller-manager*, and *kube-scheduler*, together with *etcd*, which stores the cluster data.

The *kube-apiserver* acts as the front-end for the Kubernetes control plane. It validates and configures data for API objects such as pods and services. The *kube-controller-manager* runs controller processes that regulate the state of the cluster. The *kube-scheduler* assigns pods to nodes based on resource availability.
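
To make the scheduling step more concrete, here is a minimal Python sketch of the "filter, then score" idea. It is a toy model, not the kube-scheduler's actual algorithm: the `Node` and `Pod` classes, their fields, and the "most CPU left" scoring rule are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class Pod:
    name: str
    cpu_millicores: int
    memory_mib: int


def schedule(pod, nodes):
    """Return the name of the node chosen for the pod, or None if none fits."""
    # Filter step: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay pending
    # Score step (hypothetical rule): prefer the node with the most CPU left.
    best = max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)
    return best.name


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
]
print(schedule(Pod("web", cpu_millicores=1000, memory_mib=512), nodes))  # node-b
```

Only `node-b` has enough free CPU for the pod, so it is the only candidate that survives the filter step.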

Understanding these master machine components is essential for effectively managing a Kubernetes cluster. By grasping their roles and functions, you can optimize your cluster for performance and scalability.

Node Components

Key components include the kubelet, which is the primary **node agent** responsible for managing containers on the node. The kube-proxy facilitates network connectivity for pods. The container runtime, such as Docker or containerd, is used to run containers.

Nodes do not run their own API server. Instead, the kubelet and kube-proxy on each node communicate with the **Kubernetes API** server in the control plane, which keeps the nodes coordinated with the rest of the cluster. Understanding these components is crucial for effectively managing and scaling your Kubernetes infrastructure.

Persistent Volumes

Persistent Volumes decouple storage from the pods, ensuring data remains intact even if the pod is terminated.

This makes it easier to manage data and allows for scalability and replication of storage.

Persistent Volumes can be dynamically provisioned or statically defined based on the needs of your application.
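
The decoupling can be pictured as a claim being matched to a volume, independent of any pod. The sketch below is a simplified model of static binding, not the real controller: the class names, the fields, and the "smallest volume that fits" rule are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PersistentVolume:
    name: str
    capacity_gib: int
    access_modes: frozenset
    claimed_by: Optional[str] = None  # name of the claim bound to this volume


@dataclass
class Claim:
    name: str
    request_gib: int
    access_mode: str


def bind(claim, volumes):
    """Bind the claim to the smallest unclaimed volume that satisfies it."""
    candidates = [
        v for v in volumes
        if v.claimed_by is None
        and v.capacity_gib >= claim.request_gib
        and claim.access_mode in v.access_modes
    ]
    if not candidates:
        return None  # with dynamic provisioning, a new volume could be created here
    chosen = min(candidates, key=lambda v: v.capacity_gib)
    chosen.claimed_by = claim.name
    return chosen.name


volumes = [
    PersistentVolume("pv-large", 100, frozenset({"ReadWriteOnce"})),
    PersistentVolume("pv-small", 10, frozenset({"ReadWriteOnce"})),
]
print(bind(Claim("db-data", 5, "ReadWriteOnce"), volumes))  # pv-small
```

Because the binding is recorded on the volume and the claim rather than on a pod, a replacement pod that references the same claim would see the same data.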

By utilizing Persistent Volumes effectively, you can ensure high availability and reliability for your applications in Kubernetes.

Software Components

Another important software component is the kube-scheduler, which assigns workloads to nodes based on available resources and constraints. The kube-controller-manager acts as the brain of the cluster, monitoring the state of various resources and ensuring they are in the desired state.
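
The "desired state" idea behind controllers can be sketched as a small reconcile function. This is an illustrative model only; the function name and the action strings are hypothetical, and real controllers watch the API server instead of receiving plain lists.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions that move the running pods toward the desired count."""
    current = len(running_pods)
    if current < desired_replicas:
        # Too few pods: request one creation per missing replica.
        return ["create"] * (desired_replicas - current)
    if current > desired_replicas:
        # Too many pods: delete the surplus ones at the end of the list.
        return [f"delete {name}" for name in running_pods[desired_replicas:]]
    return []  # current state already matches the desired state


print(reconcile(3, ["web-1"]))           # ['create', 'create']
print(reconcile(1, ["web-1", "web-2"]))  # ['delete web-2']
```

A controller would run a comparison like this repeatedly, so that any drift, such as a crashed pod, is corrected on a later pass.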

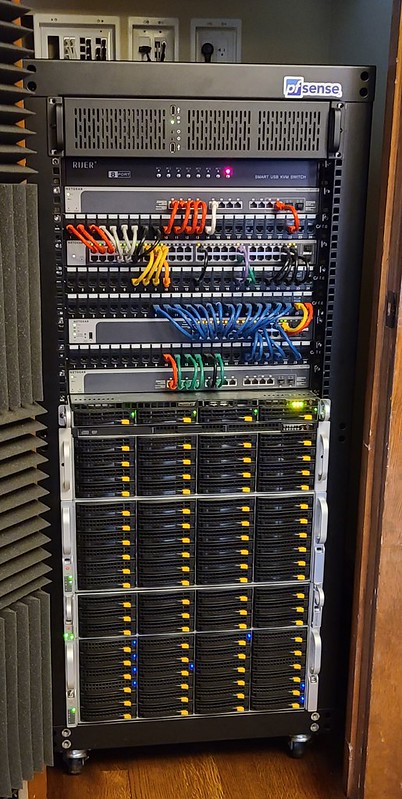

Hardware Components

In a Kubernetes cluster, hardware resources such as CPU, memory, storage, and networking are distributed across multiple **nodes**. Each node contributes its own set of hardware, and together the nodes make up the overall infrastructure of the cluster. Understanding these hardware components and their distribution is essential for managing workloads effectively.

By optimizing the hardware components and their allocation, you can ensure high availability and performance of your applications running on the Kubernetes cluster. Proper management of hardware resources is key to maintaining a stable and efficient environment for your applications to run smoothly.

Kubernetes Proxy

The Kubernetes proxy, kube-proxy, runs on each node and maintains the network rules that direct traffic addressed to a service to one of the pods backing it. In this way it acts as a network intermediary between the host machine and the pods, and it provides basic load balancing across a service's pods.
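
As a rough illustration of directing service traffic to pods, the sketch below maps a service name to a list of pod addresses and hands them out in round-robin order. It is a toy stand-in, not how kube-proxy is implemented: the `ServiceProxy` class and its methods are hypothetical, and the real component programs kernel-level rules rather than forwarding requests in Python.

```python
import itertools


class ServiceProxy:
    """Toy model of the per-node mapping from a service to its pod endpoints."""

    def __init__(self):
        self._endpoints = {}  # service name -> list of pod addresses
        self._cursors = {}    # service name -> round-robin iterator

    def set_endpoints(self, service, pod_addresses):
        # Replace the endpoint list, as would happen when pods come and go.
        self._endpoints[service] = list(pod_addresses)
        self._cursors[service] = itertools.cycle(self._endpoints[service])

    def route(self, service):
        # Pick the next pod address for a request sent to the service.
        if not self._endpoints.get(service):
            raise LookupError(f"no endpoints for service {service!r}")
        return next(self._cursors[service])


proxy = ServiceProxy()
proxy.set_endpoints("web", ["10.0.0.1:8080", "10.0.0.2:8080"])
print([proxy.route("web") for _ in range(3)])
# ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.1:8080']
```

Clients only ever refer to the service name, so pods can be replaced without the clients noticing.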

Understanding how the Kubernetes Proxy works is essential for anyone looking to work with Kubernetes architecture. By grasping this concept, you can effectively manage and troubleshoot networking issues within your cluster.

Deployment

Using Kubernetes, you can easily manage the lifecycle of applications, ensuring they run smoothly without downtime. Kubernetes abstracts the underlying infrastructure, allowing you to focus on the application itself. By utilizing **containers** to package applications and their dependencies, Kubernetes streamlines deployment across various environments.

With Kubernetes, you can easily replicate applications to handle increased workload and ensure high availability. Additionally, Kubernetes provides tools for monitoring and managing applications, making deployment a seamless process.
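
As a concrete example of replicating an application, the sketch below builds a minimal Deployment manifest as a Python dictionary and prints it as JSON. The helper name `deployment_manifest`, the application name `web`, and the image `nginx:1.25` are placeholders chosen for illustration; the field names follow the `apps/v1` Deployment API.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build a minimal apps/v1 Deployment manifest as a dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of identical pods to keep running
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, since `kubectl` accepts JSON manifests as well as YAML. Changing `replicas` and applying again is one way to scale the application.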

Ingress

Ingress is an API object that manages external access, typically over HTTP and HTTPS, to services running in the cluster. Using Ingress simplifies traffic routing, load balancing, and TLS (SSL) termination for those applications.

By configuring Ingress resources, users can define how traffic should be directed to different services based on hostnames and paths. Some Ingress controllers also support routing on other factors, such as headers, through their own extensions.

Ingress controllers, such as NGINX or Traefik, are responsible for implementing the rules defined in Ingress resources and managing the traffic flow within the cluster.
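
The host and path matching that a controller performs can be approximated with a few lines of Python. This is a simplified sketch, not the behavior of any particular controller: the rule dictionaries, the hostname `shop.example.com`, and the service names are made up, and only prefix matching on whole path segments is modeled.

```python
def _segments(path):
    # Split "/api/orders" into ["api", "orders"]; "/" becomes [].
    return [part for part in path.split("/") if part]


def route_request(rules, host, path):
    """Return the backend service for a request, or None if no rule matches."""
    request_parts = _segments(path)
    matches = []
    for rule in rules:
        rule_parts = _segments(rule["path"])
        # A rule matches when the host is equal and its path segments
        # are a prefix of the request's path segments.
        if rule["host"] == host and request_parts[:len(rule_parts)] == rule_parts:
            matches.append((len(rule_parts), rule["service"]))
    if not matches:
        return None
    # The most specific (longest) matching path wins.
    return max(matches)[1]


rules = [
    {"host": "shop.example.com", "path": "/", "service": "frontend"},
    {"host": "shop.example.com", "path": "/api", "service": "backend"},
]
print(route_request(rules, "shop.example.com", "/api/orders"))  # backend
print(route_request(rules, "shop.example.com", "/cart"))        # frontend
```

Requests for a host with no rule return `None` here; a real controller would typically send them to a default backend or answer with an error.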