Welcome to the Kubernetes Learning Roadmap, your essential guide to mastering the revolutionary world of container orchestration.

Hit the Star! ⭐

To truly excel in the world of Kubernetes, you need a solid foundation in Linux. Linux training is essential for understanding the underlying system and effectively managing your Kubernetes cluster.

With Linux training, you’ll learn the ins and outs of the operating system that powers Kubernetes. You’ll gain knowledge in important concepts like networking, file systems, and security. This knowledge is crucial for effectively deploying, scaling, and managing your Kubernetes environment.

Understanding concepts like load balancing, proxy servers, and software-defined networking will help you optimize your Kubernetes cluster’s performance. You’ll also learn about important technologies like Transport Layer Security (TLS) and the Domain Name System (DNS), which are essential for securing and routing traffic within your cluster.

Cloud computing platforms like Amazon Web Services (AWS) and Microsoft Azure offer robust support for Kubernetes, and the Linux skills described above carry over directly to deploying and managing a cluster on these platforms.

Learning YAML, the data serialization format used to write Kubernetes manifests, is another crucial skill to develop. YAML allows you to define the desired state of your cluster and manage your Kubernetes resources declaratively. With YAML, you can create and update deployments, services, and other components of your Kubernetes environment.

By taking Linux training, you’ll gain the knowledge and skills needed to navigate the complex world of Kubernetes. The Linux Foundation and Cloud Native Computing Foundation offer excellent resources and training programs to help you on your learning journey. Don’t miss out on the opportunity to become a skilled Kubernetes engineer and take your career to new heights. Hit the star and start your Linux training today! ⭐

Kubernetes Certification Coupon 🎉

Looking to get certified in Kubernetes? 🎉 Don’t miss out on our exclusive Kubernetes Certification Coupon! With this coupon, you can save on Linux training and fast-track your way to becoming a certified Kubernetes expert.

Kubernetes is a powerful container orchestration platform that is widely used in cloud computing and DevOps environments. By obtaining a Kubernetes certification, you can demonstrate your expertise in managing and deploying containerized applications using this popular technology.

Our Kubernetes Learning Roadmap provides a step-by-step guide to help you navigate the learning process. Whether you’re a beginner or already have some experience with Kubernetes, this roadmap will help you build a solid foundation and advance your skills.

The Linux training courses recommended in this roadmap are carefully selected to provide comprehensive coverage of Kubernetes concepts and hands-on experience. You’ll learn about important topics such as containerization, deployment, scaling, and load balancing. Additionally, you’ll gain knowledge on essential tools and technologies like NoSQL databases, proxy servers, and software-defined networking.

With your Kubernetes certification, you’ll open up new opportunities in the IT industry. Many companies, including major cloud providers like Amazon Web Services and Microsoft Azure, are actively seeking professionals with Kubernetes skills. By joining the ranks of certified Kubernetes experts, you’ll position yourself as a highly desirable candidate for job roles that require expertise in containerization and cloud-native technologies.

Don’t miss out on this exclusive Kubernetes Certification Coupon! Take advantage of this offer and start your journey towards becoming a certified Kubernetes professional today.

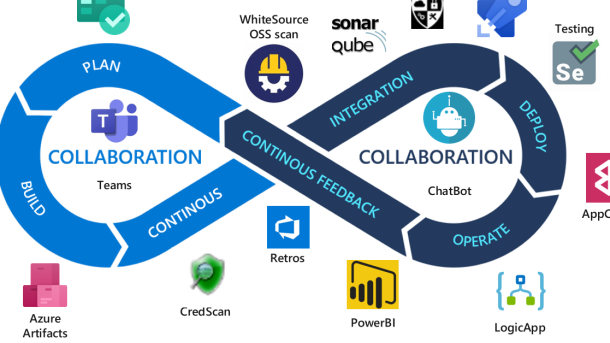

Kubernetes Architecture

A Kubernetes cluster is split into a control plane and a data plane. The control plane is responsible for managing the overall cluster and includes the **API server**, **scheduler**, **controller manager**, and **etcd**. The API server exposes the Kubernetes API, the scheduler assigns pods to nodes, the controller manager runs the control loops that drive the cluster toward its desired state, and etcd stores the cluster state as a key-value database.

The data plane is responsible for running the actual containers. It consists of the nodes in the cluster, each of which runs a **kubelet**, a **kube-proxy**, and a container runtime. The kubelet communicates with the control plane, starts and stops the containers for the pods assigned to its node, and ensures that they stay in the desired state. The kube-proxy maintains the network rules on each node that implement Services, so that traffic sent to a Service is routed and load-balanced to the correct pods.

Understanding the architecture of Kubernetes is vital for anyone looking to work with containerized applications. It provides a solid foundation for managing and scaling applications in a distributed environment. By familiarizing yourself with the various components and how they interact, you’ll be better equipped to troubleshoot issues, optimize performance, and make informed decisions regarding your infrastructure.

To learn more about Kubernetes architecture, consider taking Linux training courses that cover topics such as containerization, networking, and distributed systems. These courses will provide you with the knowledge and skills needed to effectively work with Kubernetes and leverage its full potential in your projects. With the rapidly growing adoption of containers and cloud-native technologies, investing in Kubernetes training is a wise decision that will open up new career opportunities in the field of DevOps and cloud engineering.

$1000+ Free Cloud Credits to Launch Clusters

Start by familiarizing yourself with important concepts such as NoSQL, load balancing, proxy servers, and databases. Understanding these fundamentals will help you navigate the world of Kubernetes more effectively.

Learn about Transport Layer Security (TLS), APIs, Domain Name System (DNS), and the OSI model to gain a deeper understanding of how Kubernetes interacts with the network.

Mastering concepts like IP addresses, user authentication, and classless inter-domain routing (CIDR) will enhance your ability to configure and secure Kubernetes clusters.

Explore libraries, cloud-native technologies, overlay networks, and command-line interfaces (CLIs) to build a solid foundation for managing Kubernetes environments.

Get hands-on experience with configuration files, information storage and retrieval, and runtime systems to sharpen your skills as a Kubernetes practitioner.

Understand the importance of nodes, serialization, key-value databases, and computer file management in the context of Kubernetes.

Use high-level architecture planning and regular backups to keep your Kubernetes deployments reliable and recoverable.

Enhance your knowledge of credentials, GitHub, network address translation (NAT), and the Linux Foundation to become a well-rounded Kubernetes engineer.

Expect a learning curve as you dive deeper into Kubernetes: it is a complex system, it is configured through YAML files, and it can be run on cloud providers such as Linode.

Gain a working understanding of clients, high availability, and programming languages to excel in Kubernetes management.

With $1000+ free cloud credits, you have the opportunity to experiment and apply your knowledge in a real-world environment. Take advantage of this offer and start your Kubernetes learning roadmap today.

Understand KubeConfig File

The KubeConfig file is what allows you to authenticate to and access Kubernetes clusters. It is a configuration file that contains information about clusters, users, and contexts. Understanding the KubeConfig file is essential for managing and interacting with Kubernetes clusters effectively.

The KubeConfig file is located by default at ~/.kube/config and is used by the Kubernetes command-line tool, kubectl. It contains details such as the cluster’s API server address, the user’s credentials, and the context to use.

To configure kubectl to use a specific KubeConfig file, you can set the KUBECONFIG environment variable or pass the --kubeconfig flag to the kubectl command. This flexibility allows you to switch between different clusters and contexts easily.

In the KubeConfig file, you define clusters by specifying the cluster’s name, server address, and certificate authority. Users are defined with their authentication credentials, including client certificates, client keys, or authentication tokens. Contexts are used to associate a cluster and user, allowing you to switch between different configurations.
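The structure described above can be sketched as a minimal KubeConfig file. This is an illustration only: the names `dev-cluster`, `dev-user`, and `dev`, the server address, and the certificate paths are all hypothetical placeholders, not values from any real cluster.

```yaml
apiVersion: v1
kind: Config
# Clusters: a name, the API server address, and the CA used to verify it.
clusters:
  - name: dev-cluster                    # hypothetical cluster name
    cluster:
      server: https://203.0.113.10:6443  # placeholder API server address
      certificate-authority: /path/to/ca.crt
# Users: the credentials presented to the API server.
users:
  - name: dev-user                       # hypothetical user name
    user:
      client-certificate: /path/to/client.crt
      client-key: /path/to/client.key
# Contexts: pair a cluster with a user (and optionally a default namespace).
contexts:
  - name: dev
    context:
      cluster: dev-cluster
      user: dev-user
      namespace: default
# The context kubectl uses when none is given explicitly.
current-context: dev
```

With a file like this, switching clusters amounts to changing the current context, for example with `kubectl config use-context dev`.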

Understanding the structure and contents of the KubeConfig file is vital for managing access to your Kubernetes clusters. It enables you to authenticate with the cluster, interact with the API server, and perform various operations like deploying applications, scaling resources, and managing configurations.

By gaining proficiency in managing the KubeConfig file, you can effectively navigate the Kubernetes learning roadmap. It is an essential skill for anyone interested in Linux training and exploring the world of container orchestration.


Understand Kubernetes Objects And Resources

Each object below is listed with its description and an example manifest.

**Pod**: The smallest and simplest unit in the Kubernetes object model, representing a single instance of a running process in a cluster. Example:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      containers:
      - name: my-container
        image: nginx

**Deployment**: A higher-level abstraction that manages and scales a set of replicated pods, providing declarative updates to the desired state. Example:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app
        spec:
          containers:
          - name: my-container
            image: nginx

**Service**: An abstract way to expose an application running on a set of pods, enabling network access and load balancing. Example:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      selector:
        app: my-app
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80

**ConfigMap**: A configuration store that separates configuration from container image, allowing easy updates without rebuilding the image. Example:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: my-configmap
    data:
      my-key: my-value

**Secret**: A secure way to store sensitive information, such as passwords or API keys, separately from the container image. Example:

    apiVersion: v1
    kind: Secret
    metadata:
      name: my-secret
    type: Opaque
    data:
      username: dXNlcm5hbWU=
      password: cGFzc3dvcmQ=

Learn About Pod Dependent Objects

Pod dependent objects are an important concept in Kubernetes. These objects are closely tied to pods, which are the smallest and simplest unit in the Kubernetes ecosystem. By understanding pod dependent objects, you can effectively manage and deploy your applications on a Kubernetes cluster.

One example of a pod dependent object is a service. A service is used to expose a group of pods to other services or external users. It acts as a load balancer, distributing incoming traffic to the pods behind it. This is essential for creating a scalable and resilient application architecture.

Another important pod dependent object is a persistent volume claim (PVC). PVCs are used to request storage resources from a storage provider, such as a cloud provider or a network-attached storage system. By using PVCs, you can ensure that your application’s data is persisted even if the pods are deleted or rescheduled.
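As a rough sketch of how a PVC ties into a pod, the manifests below request 1Gi of storage and mount it into an nginx container. The names `my-pvc` and `my-pod-with-storage`, the size, and the mount path are illustrative assumptions; whether the claim is actually bound depends on the storage classes available in your cluster.

```yaml
# Claim: asks the cluster for 1Gi of storage that one node can mount read-write.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc                 # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
# Pod: mounts the claimed volume; the data outlives this pod.
apiVersion: v1
kind: Pod
metadata:
  name: my-pod-with-storage    # hypothetical pod name
spec:
  containers:
    - name: my-container
      image: nginx
      volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: my-pvc      # must match the claim's metadata.name
```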

In addition to services and PVCs, there are other pod dependent objects that you may encounter in your Kubernetes journey. These include configmaps, secrets, and ingresses. Configmaps are used to store configuration data that can be consumed by pods. Secrets, on the other hand, are used to store sensitive information such as passwords or API tokens. Ingresses are used to define rules for routing external traffic to services within the cluster.
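One common way for a pod to consume a ConfigMap and a Secret is through environment variables. The sketch below assumes a ConfigMap named `my-configmap` with a key `my-key` and a Secret named `my-secret` with a key `password` already exist in the same namespace; the pod name and the variable names `MY_KEY` and `PASSWORD` are made up for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-configured-pod      # hypothetical pod name
spec:
  containers:
    - name: my-container
      image: nginx
      env:
        # Plain configuration value read from the ConfigMap.
        - name: MY_KEY
          valueFrom:
            configMapKeyRef:
              name: my-configmap
              key: my-key
        # Sensitive value read from the Secret (decoded from base64 by Kubernetes).
        - name: PASSWORD
          valueFrom:
            secretKeyRef:
              name: my-secret
              key: password
```

Both objects can also be mounted as files through volumes, which is often preferable for larger configuration or for certificates.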

Understanding pod dependent objects is crucial for effectively managing your Kubernetes applications. By leveraging these objects, you can create scalable, reliable, and secure applications on a Kubernetes cluster. So, as you embark on your journey to learn Kubernetes, make sure to dive deep into the world of pod dependent objects. They will be invaluable in your quest to become a Kubernetes expert.

Learn About Services

When diving into the world of Kubernetes, it’s important to understand the various services that play a crucial role in its functionality. Services such as load balancing, proxy servers, and databases are essential components of a Kubernetes cluster.

Load balancing distributes traffic across multiple pods and nodes in a cluster, improving performance and preventing any single instance from being overwhelmed; in Kubernetes, this is the job of a Service. Proxy servers act as intermediaries between clients and servers, handling requests and forwarding them to the appropriate destinations; kube-proxy plays a similar role on each node.
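In Kubernetes terms, load balancing is usually expressed as a Service. The following is a minimal sketch of a Service of type `LoadBalancer`, assuming pods labeled `app: my-app` that listen on port 80; the Service name is hypothetical, and an external load balancer is only provisioned when the cluster runs on a platform that supports it (typically a cloud provider).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalanced-service   # hypothetical name
spec:
  type: LoadBalancer              # ask the platform for an external load balancer
  selector:
    app: my-app                   # traffic is spread across pods with this label
  ports:
    - protocol: TCP
      port: 80                    # port exposed by the Service
      targetPort: 80              # port the pods listen on
```

Omitting `type` gives the default `ClusterIP` Service, which load-balances only inside the cluster.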

Databases store and manage data, allowing applications to access and manipulate information efficiently. Understanding how databases integrate with Kubernetes is vital for developing scalable and resilient applications.

In addition to these core services, it’s also beneficial to have knowledge of concepts like overlay networks, command-line interfaces, and configuration files. Overlay networks enable communication between pods running on different nodes in a cluster, while command-line interfaces such as kubectl provide a powerful tool for interacting with Kubernetes.

Configuration files contain settings and parameters that define how Kubernetes operates, allowing for customization and fine-tuning. Familiarity with these files is essential for configuring and managing a Kubernetes environment effectively.

Other important services to consider include key-value databases, backup solutions, and high availability architectures. Key-value databases offer a flexible data storage model, while backup solutions ensure the safety and integrity of critical data. High availability architectures provide redundancy and fault tolerance, minimizing downtime and ensuring continuous operations.

To enhance your learning experience, there are many resources available, such as the Cloud Native Computing Foundation, which offers educational materials and certifications. Online platforms like GitHub also provide repositories of code and documentation, allowing you to explore real-world examples and collaborate with the community.

Learn to Implement Network Policy

Implementing network policy is a crucial aspect of managing a Kubernetes cluster. With the right network policies in place, you can ensure secure and efficient communication between different components of your cluster.

To start implementing network policy in Kubernetes, you need to understand the concept of network policy and how it fits into the overall architecture of your cluster. Network policies define rules and restrictions for inbound and outbound network traffic within the cluster. They allow you to control access to your applications and services and protect them from unauthorized access.

One important aspect of network policy is understanding the different components involved, such as **pods**, **services**, and **endpoints**. Pods are the basic building blocks of your application, services provide a stable network endpoint in front of a set of pods, and endpoints are the IP addresses and ports of the pods that back a service.

When creating network policies, you first select the pods a policy applies to with a **pod selector**, then match traffic using criteria such as **pod and namespace selectors**, **IP blocks**, **port numbers**, and **protocol types**. You can define **ingress** and **egress** rules to control inbound and outbound traffic.
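The match criteria above can be combined in a single policy. The sketch below is an assumption-laden example: the policy name, the labels `app: my-app` and `role: frontend`, the CIDR `10.0.0.0/16`, and the ports are all illustrative values rather than anything prescribed by this roadmap.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-app     # hypothetical policy name
  namespace: default
spec:
  # The pods this policy applies to.
  podSelector:
    matchLabels:
      app: my-app
  policyTypes:
    - Ingress
    - Egress
  ingress:
    # Allow inbound TCP/80 from frontend pods or from the given IP block.
    - from:
        - podSelector:
            matchLabels:
              role: frontend
        - ipBlock:
            cidr: 10.0.0.0/16
      ports:
        - protocol: TCP
          port: 80
  egress:
    # Allow outbound TCP/5432 to the given IP block only.
    - to:
        - ipBlock:
            cidr: 10.0.0.0/16
      ports:
        - protocol: TCP
          port: 5432
```

Note that once egress is restricted like this, anything not listed is blocked for the selected pods, including DNS lookups, so real policies usually add a rule for DNS as well.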

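To implement network policy in Kubernetes, you need a **network plugin** that provides network policy enforcement; without one, NetworkPolicy objects are accepted by the API server but have no effect. Popular plugins that enforce network policy include Calico and Cilium. Flannel on its own does not enforce network policies, so it is typically combined with another plugin, such as Calico, when policies are required.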

When implementing network policy, it’s important to test and validate your policies to ensure they are working as expected. You can use tools like **kubectl** to apply and manage network policies, and **kubectl describe** to get detailed information about the policies in your cluster.

Learn About Securing Kubernetes Cluster

Securing your Kubernetes cluster is crucial to protect your applications and data. By following best practices, you can minimize the risk of unauthorized access and potential security breaches.

To start, ensure that you have a strong authentication and authorization system in place. Implement role-based access control (RBAC) to define and manage user permissions within your cluster. It’s also important to regularly update and patch your system to address any security vulnerabilities that may arise.
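A minimal RBAC sketch, assuming a user named `jane` who should only be able to read pods in the `default` namespace. The role name, binding name, and user name are illustrative; how users are actually identified depends on the authentication method your cluster uses.

```yaml
# Role: the set of permissions, scoped to one namespace.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: pod-reader               # hypothetical role name
rules:
  - apiGroups: [""]              # "" is the core API group, where pods live
    resources: ["pods"]
    verbs: ["get", "watch", "list"]
---
# RoleBinding: grants the Role to a subject.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods                # hypothetical binding name
  namespace: default
subjects:
  - kind: User
    name: jane                   # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

Granting the narrowest set of verbs and resources that a user or service account needs keeps the impact of a leaked credential small.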

Additionally, consider using network policies to control traffic flow between your pods and limit communication to only necessary services. This can help prevent unauthorized access and limit the impact of any potential breaches.
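One way to limit communication to only what is necessary is to start from a default-deny policy and then add specific allow rules on top of it. The sketch below, with a hypothetical policy name, blocks all inbound traffic to every pod in the namespace it is applied to, assuming the cluster's network plugin enforces network policies.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress     # hypothetical policy name
spec:
  podSelector: {}                # empty selector: applies to all pods in the namespace
  policyTypes:
    - Ingress                    # no ingress rules listed, so all inbound traffic is denied
```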

Another key aspect of securing your cluster is protecting its control plane. Use secure communication channels, such as HTTPS, for API server communication. Implement certificate-based authentication and encryption to secure the communication between components.

Monitoring and logging are essential for detecting and responding to security incidents. Set up centralized logging and monitoring solutions to track and analyze cluster activity. This can help you identify any suspicious behavior and take appropriate action.

Finally, regularly back up your cluster data so that you can recover in the event of a security incident or data loss. Implement a robust backup strategy that includes both system-level backups (such as snapshots of etcd, which holds the cluster state) and application-level backups.

Securing your Kubernetes cluster may require some additional learning and training, especially in areas such as network security and authentication mechanisms. Consider taking Linux training courses that cover these topics and provide hands-on experience with securing Kubernetes clusters.