Welcome to the world of blockchain programming! In this article, we will explore the ins and outs of blockchain technology and how you can develop your skills in this rapidly growing field.

Blockchain Programming Essentials

The Blockchain Programming Essentials course covers the fundamentals of programming for blockchain technology. Students learn what a blockchain is, how it works, and where it is applied. The course then moves on to developing smart contracts, decentralized applications, and cryptocurrencies. By the end of the course, participants will have a solid understanding of blockchain programming and the skills needed to pursue a career in the blockchain industry.
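To make "how it works" a little more concrete, here is a minimal sketch of the core idea: each block stores the hash of the block before it, so changing any earlier block breaks the chain. The `Block` and `Chain` classes are hypothetical teaching names, not part of any real blockchain library, and the sketch leaves out networking, consensus, and signatures entirely.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field


@dataclass
class Block:
    index: int
    data: str
    prev_hash: str
    timestamp: float = field(default_factory=time.time)

    def compute_hash(self) -> str:
        # Serialize the block's fields deterministically, then hash them with SHA-256.
        payload = json.dumps(
            {"index": self.index, "data": self.data,
             "prev_hash": self.prev_hash, "timestamp": self.timestamp},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()


class Chain:
    """Hypothetical toy chain: a list of blocks linked by their hashes."""

    def __init__(self) -> None:
        # The first ("genesis") block has no predecessor, so it points at an all-zero hash.
        self.blocks = [Block(0, "genesis", "0" * 64)]

    def add(self, data: str) -> Block:
        prev = self.blocks[-1]
        block = Block(prev.index + 1, data, prev.compute_hash())
        self.blocks.append(block)
        return block

    def is_valid(self) -> bool:
        # Every block must store the current hash of the block before it.
        return all(
            cur.prev_hash == prev.compute_hash()
            for prev, cur in zip(self.blocks, self.blocks[1:])
        )


chain = Chain()
chain.add("Alice pays Bob 5")
chain.add("Bob pays Carol 2")
print(chain.is_valid())  # True
chain.blocks[1].data = "Alice pays Bob 500"  # tamper with history
print(chain.is_valid())  # False
```

Tampering with block 1 changes its hash, so block 2's stored `prev_hash` no longer matches, and validation fails.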

Exploring Blockchain Courses

Looking to get started in blockchain? Consider enrolling in a Blockchain Programming Course to gain the skills this field requires. Learning about distributed computing, cryptocurrency, and cybersecurity can help you carve out a niche in the industry, and a focus on computer programming and software engineering will prepare you to tackle real-world challenges in blockchain development.

Explore how smart contracts and mining (the process by which proof-of-work networks such as Bitcoin add new blocks) work to understand the potential of blockchain technology. A strong foundation in computer science and network security will also help you excel in this rapidly growing field.
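In blockchain, "mining" usually refers to proof-of-work: searching for a number (a nonce) that makes a block's hash meet a difficulty target. The sketch below is a simplified model, assuming the target is "the hex digest starts with N zeros". Bitcoin itself applies double SHA-256 to an 80-byte block header and compares the result against a numeric target, so treat `mine` and `verify` as hypothetical illustrations only.

```python
import hashlib


def mine(block_data: str, difficulty: int) -> tuple[int, str]:
    """Search for a nonce whose SHA-256 digest starts with `difficulty` zero hex digits."""
    target = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target):
            return nonce, digest
        nonce += 1


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    # Checking a solution takes a single hash, while finding it took many attempts.
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)


nonce, digest = mine("Alice pays Bob 5", difficulty=4)
print(nonce, digest)
print(verify("Alice pays Bob 5", nonce, difficulty=4))  # True
```

The asymmetry is the point of the design: finding a valid nonce costs work proportional to the difficulty, but anyone can check it instantly.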

Blockchain Specialization Options

Specialization options in a **Blockchain Programming Course** include **smart contracts**, **distributed computing**, **cybersecurity**, and **cryptocurrency**. These courses cover the details of blockchain technology and teach students how to build secure and efficient blockchain applications. Familiarity with **cryptographic algorithms** and with enterprise frameworks such as those in the **Hyperledger** project is valuable for mastering blockchain development. By specializing in blockchain programming, you can gain skills that are in demand in industries such as fintech, computer security, and software engineering. Start your journey toward becoming a blockchain expert by enrolling in a specialized course today.

Understanding Bitcoin and Cryptocurrency Technologies

Learn the fundamentals of **blockchain** and cryptocurrency technologies through a comprehensive **programming course**. Gain practical knowledge of **Bitcoin** and other digital currencies, as well as the underlying **technology** that powers them. Understand the role of **security**, **cryptography**, and **risk management** in **fintech**, and discover the opportunities for **innovation** and **entrepreneurship** in this rapidly growing field.

Develop your skills in **computer programming** and **algorithm** design to create **smart contracts** and secure **networks**. Take the first step towards a career in **Blockchain development** with this valuable course.
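As a small example of the cryptography and algorithm design involved, the sketch below computes a Merkle root, the single hash that summarizes all transactions in a Bitcoin block. It is a simplified model, assuming double SHA-256 and Bitcoin's rule of duplicating the last hash when a level has an odd count. It hashes raw byte strings rather than serialized transactions and ignores Bitcoin's byte-order conventions, and the function names are hypothetical.

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def merkle_root(transactions: list[bytes]) -> bytes:
    """Hash each leaf, then hash adjacent pairs level by level until one hash remains."""
    if not transactions:
        raise ValueError("need at least one transaction")
    level = [double_sha256(tx) for tx in transactions]
    while len(level) > 1:
        if len(level) % 2 == 1:
            # Bitcoin-style rule: duplicate the last hash when a level is odd-sized.
            level.append(level[-1])
        level = [double_sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


txs = [b"Alice->Bob:5", b"Bob->Carol:2", b"Carol->Dave:1"]
root = merkle_root(txs)
print(root.hex())
# Changing any single transaction changes the root.
print(merkle_root([b"Alice->Bob:500", *txs[1:]]) != root)  # True
```

Because the root depends on every leaf, a block header only needs to store this one hash to commit to its entire transaction list.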

Benefits of Learning Blockchain

Learning blockchain offers several benefits, including a stronger grounding in computer security and the ability to build secure, decentralized systems. Blockchain knowledge can also open doors in entrepreneurship and in adjacent fields such as machine learning. By mastering blockchain, you learn how to use cryptography to secure transactions, how to design systems that are harder to attack, and what regulatory compliance requires. Blockchain knowledge can also strengthen risk management strategies and offer insights into behavioral economics.

Blockchain Curriculum Overview

This curriculum covers essential topics such as smart contracts, blockchain mining, and security engineering. Students study programming languages, algorithms, and computer networks, with an emphasis on problem-solving and critical thinking in the context of blockchain technology. With a focus on innovation and strategy, learners explore how blockchain is applied across industries. The curriculum also includes market research, contract management, and regulatory compliance.

Developing Blockchain Skills

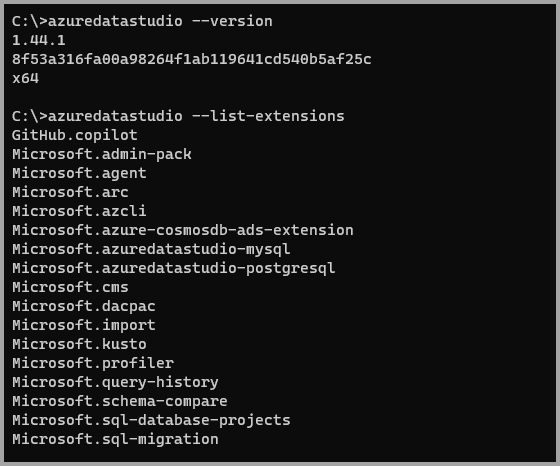

If you want to develop your **blockchain skills**, a **Blockchain Programming Course** is a good place to start. These courses typically cover **smart contracts**, **decentralized applications**, and **blockchain security**. Studying these topics gives you a deeper understanding of how blockchain technology works and how to use it in your own projects. You will also learn about **Hyperledger**, an open-source project hosted by the Linux Foundation whose **blockchain frameworks**, such as Hyperledger Fabric, are used in various industries.
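To show what a smart contract does in principle, here is a toy escrow modeled in plain Python: the rules for who may pay and when funds are released are enforced by code rather than by a middleman. This is only a conceptual sketch. Real smart contracts are written in languages such as Solidity and run on a blockchain's virtual machine, and the `Escrow` class, its states, and its method names are hypothetical.

```python
from enum import Enum, auto


class State(Enum):
    AWAITING_PAYMENT = auto()
    AWAITING_DELIVERY = auto()
    COMPLETE = auto()


class Escrow:
    """Hypothetical escrow contract modeled as a state machine."""

    def __init__(self, buyer: str, seller: str, price: int) -> None:
        self.buyer, self.seller, self.price = buyer, seller, price
        self.state = State.AWAITING_PAYMENT
        self.balances = {buyer: 0, seller: 0}
        self.held = 0  # funds locked inside the contract

    def deposit(self, sender: str, amount: int) -> None:
        if sender != self.buyer or self.state is not State.AWAITING_PAYMENT:
            raise PermissionError("only the buyer can pay, and only once")
        if amount != self.price:
            raise ValueError("deposit must equal the agreed price")
        self.held = amount
        self.state = State.AWAITING_DELIVERY

    def confirm_delivery(self, sender: str) -> None:
        if sender != self.buyer or self.state is not State.AWAITING_DELIVERY:
            raise PermissionError("only the buyer can confirm delivery after paying")
        # Release the locked funds to the seller.
        self.balances[self.seller] += self.held
        self.held = 0
        self.state = State.COMPLETE


deal = Escrow("alice", "bob", price=10)
deal.deposit("alice", 10)
deal.confirm_delivery("alice")
print(deal.state, deal.balances)  # State.COMPLETE {'alice': 0, 'bob': 10}
```

Each method checks both the caller and the current state before changing anything, which mirrors the guard checks typical of real contract code.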

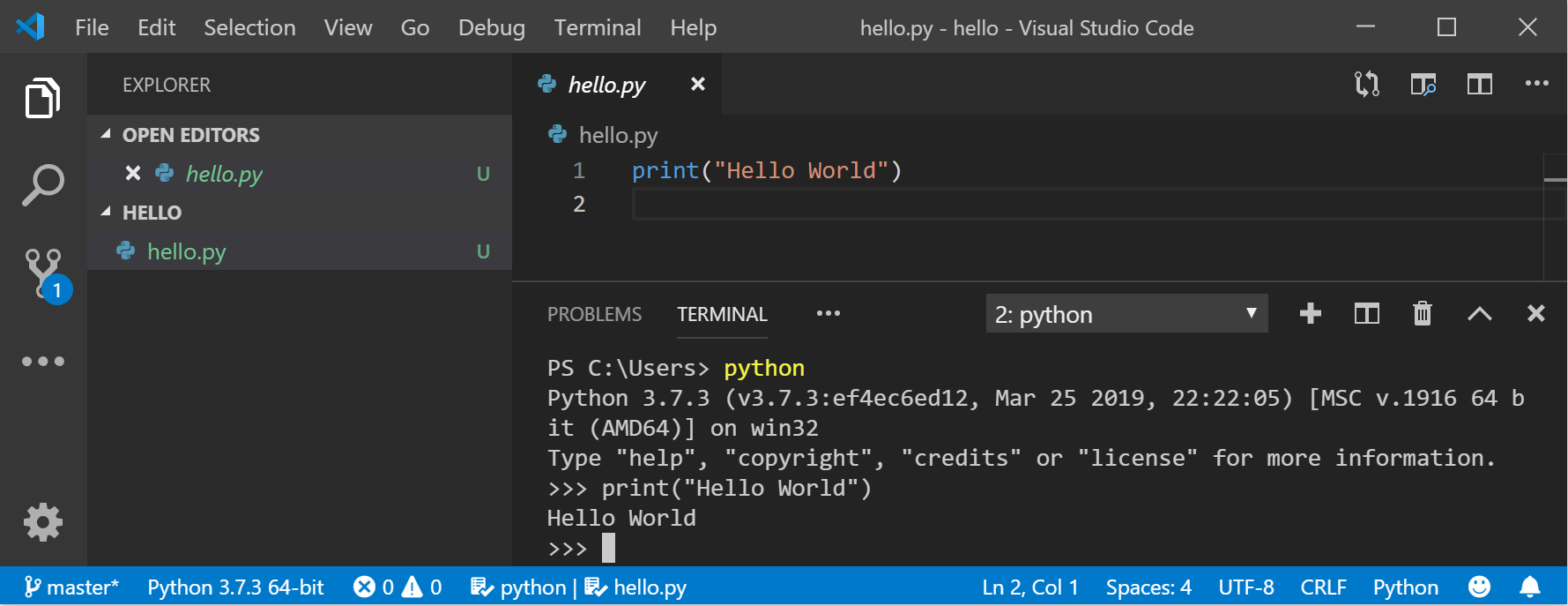

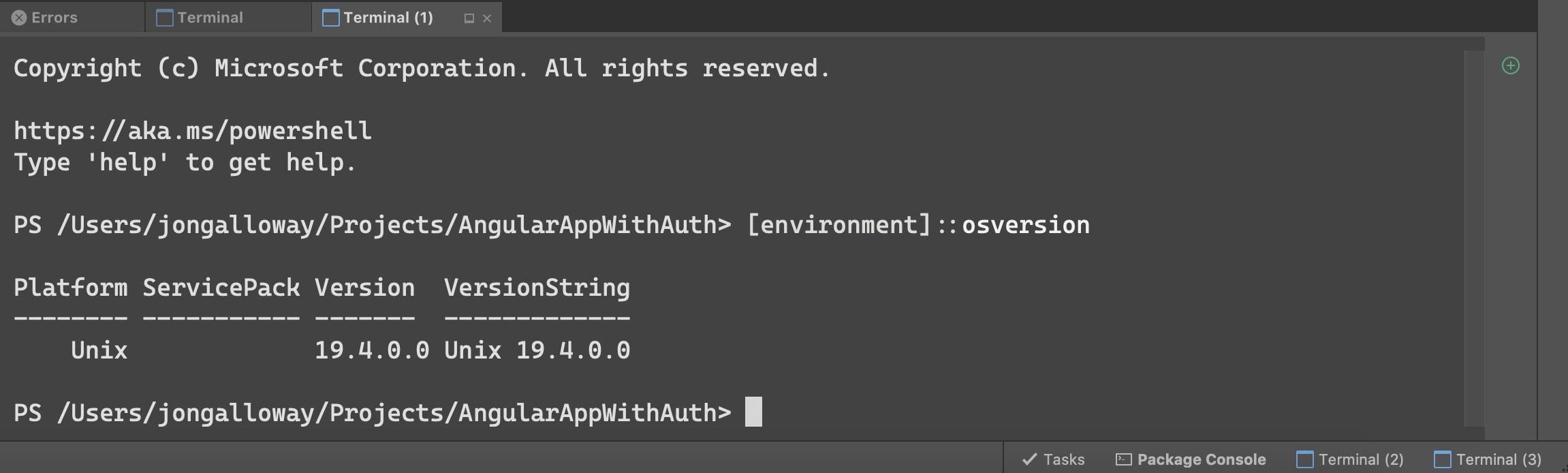

Taking a **Linux training** course alongside a **Blockchain Programming Course** can also be beneficial, because blockchain nodes and development tools commonly run on **Linux**. Proficiency in **Linux** helps you operate **blockchain systems** more effectively and troubleshoot issues as they arise. This combination of skills can make you a valuable asset in the **blockchain industry** and open up new **career opportunities**.

Exploring Blockchain Fundamentals

Blockchain fundamentals are essential for anyone looking to delve into blockchain programming. Understanding concepts such as decentralized systems, cryptography, and smart contracts is crucial for success in this field. A blockchain programming course will cover these fundamentals in depth, giving you the knowledge and skills needed to develop blockchain applications.

Learning blockchain fundamentals equips you to tackle real-world problems in areas such as finance, supply chain management, and healthcare, and it complements related fields such as network security and machine learning. As you progress through the course, you will gain practical experience building and deploying blockchain applications.

Blockchain Programming Courses

Enroll in Blockchain Programming Courses to gain valuable skills in this rapidly growing field. Learn how to develop secure and efficient blockchain applications using the latest technologies and programming languages. These courses will teach you how to create and deploy smart contracts, conduct market research, and analyze blockchain data. With a focus on problem-solving and critical thinking, you will be prepared to tackle the challenges of blockchain development.

Advance your career in blockchain technology by mastering the tools and techniques needed to succeed in this innovative industry.

Blockchain Specialization Curriculum

The curriculum covers topics such as smart contracts, cryptocurrency, and decentralized applications. Students will also gain hands-on experience with programming languages commonly used in blockchain development, such as Solidity and Python.

By the end of the course, students will be equipped with the knowledge and skills needed to develop their own blockchain-based solutions and applications. This specialized curriculum is ideal for individuals looking to enter the rapidly growing field of blockchain technology.

Importance of Blockchain Education

Blockchain education is increasingly valuable in today’s rapidly evolving digital landscape. Understanding the fundamentals of blockchain technology can open up opportunities in many industries. With cyberattacks and security threats on the rise, a solid grasp of how blockchains secure data can help you design more secure systems and networks.

Learning about blockchain also provides insights into economics and market strategy and sharpens problem-solving skills. By mastering blockchain programming languages and algorithms, you can develop innovative solutions, including smart contracts. This knowledge is valuable in fields such as investment management and software development.

Organizations like IBM are actively seeking professionals with blockchain expertise, making it a lucrative skill to possess. Investing in blockchain education can lead to a successful career in technology, finance, or even leadership roles. Stay ahead of the curve by enrolling in a blockchain programming course today.