Discover the top network engineering courses that will take your technical skills to the next level.

Career Learning Paths in Network Engineering

Top Network Engineering Courses

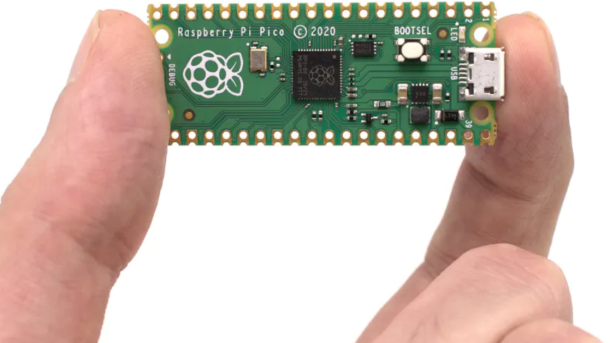

For those looking to pursue a career in network engineering, there are several **important** learning paths to consider. One of the key areas to focus on is mastering **Linux**, as it is widely used in the industry for networking tasks.

Courses that cover **Linux** essentials, shell scripting, and system administration are highly recommended. Additionally, gaining expertise in **network security** is crucial in today’s technology landscape.

Courses that cover topics such as firewall configuration, encryption techniques, and intrusion detection systems are valuable for aspiring network engineers. Lastly, courses on **Cisco** networking technologies can provide a strong foundation in routing, switching, and network troubleshooting.

By completing these courses, individuals can build a solid skill set in network engineering and increase their career prospects in the field. Whether you are looking to work in technical support, data centers, or IT infrastructure, these courses can help you achieve your career goals.

Stories from the Coursera Community in Network Engineering

One student shared how a course on network planning and design helped them secure a job at a leading IT infrastructure company. Another learner praised the hands-on experience in Cisco networking, which prepared them for a successful career in network administration. The practical skills acquired through these courses have empowered individuals to excel in the fast-paced world of technology management.

By enrolling in Linux training courses, students can gain a deeper understanding of communication protocols and network switches. These courses provide a solid foundation for those looking to specialize in areas such as wireless networks or data center management. The flexibility of online learning on Coursera allows individuals to upskill or reskill at their own pace, regardless of their background in information technology.

Whether you’re a seasoned network engineer or a newcomer to the field, Coursera offers a range of courses to help you achieve your career goals. Join the Coursera community in network engineering today and unlock your potential in the ever-evolving world of technology.

Transform Your Resume with Online Degrees

Enhance your resume by completing top network engineering courses online. These courses will provide you with the skills and knowledge needed to succeed in the field of network engineering.

By taking these courses, you will learn about technical support, network planning and design, troubleshooting, and more. This will help you to excel in roles such as network administrator or engineer.

Additionally, these courses will cover topics such as cloud computing, computer security, and network security, giving you a well-rounded education in information technology management.

Completing these courses will help you not only advance your career but also stay current with the latest advancements in technology. Don’t wait: start your Linux training today and take the first step towards a successful career in network engineering.

Top Tasks for Network Engineers

| Task | Description |
|---|---|
| Designing and implementing network infrastructure | Creating network plans, selecting appropriate hardware and software, and setting up network configurations. |
| Monitoring network performance | Using network monitoring tools to analyze network traffic, identify bottlenecks, and optimize performance. |
| Troubleshooting network issues | Identifying and resolving network problems, such as connectivity issues, slow performance, and security breaches. |
| Implementing network security measures | Setting up firewalls, intrusion detection systems, and encryption protocols to protect network data and prevent cyber attacks. |
| Collaborating with other IT professionals | Working with system administrators, security analysts, and software developers to ensure seamless integration of network services. |
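To make the "creating network plans" task a little more concrete, here is a minimal Python sketch that splits an address block into equal subnets using the standard-library `ipaddress` module. The function names `plan_subnets` and `usable_hosts` and the `192.168.10.0/24` block are illustrative assumptions, not part of any course material.

```python
import ipaddress
from typing import List


def plan_subnets(block: str, new_prefix: int) -> List[str]:
    """Split an address block into equal-sized subnets (hypothetical helper)."""
    network = ipaddress.ip_network(block)
    return [str(subnet) for subnet in network.subnets(new_prefix=new_prefix)]


def usable_hosts(cidr: str) -> int:
    """Count the assignable host addresses in a subnet (hypothetical helper)."""
    network = ipaddress.ip_network(cidr)
    # hosts() skips the network and broadcast addresses for ordinary IPv4 subnets.
    return len(list(network.hosts()))


if __name__ == "__main__":
    # Example block chosen purely for illustration.
    for subnet in plan_subnets("192.168.10.0/24", 26):
        print(subnet, usable_hosts(subnet))
```

Splitting a /24 into /26 subnets this way yields four subnets with 62 usable host addresses each, which is the kind of arithmetic a network plan typically starts from.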

Reasons to Pursue a Career in Network Engineering

– Network engineering offers the opportunity for **advancement** and growth within the industry. Because technology changes rapidly, network engineers constantly learn and develop new skills to stay relevant in the field.

– Network engineering also offers the chance to work in a variety of industries, from **cloud computing** to data centers, giving professionals the opportunity to explore different areas of interest within the field.

– Additionally, network engineering can provide a sense of accomplishment as professionals play a crucial role in ensuring the smooth functioning of computer networks, which are essential for communication, data transfer, and overall business operations.

Average Salary for Network Engineers

Network engineering courses can provide valuable skills and knowledge in areas such as **computer network** design, **Internet Protocol** management, and **computer security**. These courses can help individuals become proficient in setting up and maintaining networks, as well as troubleshooting any issues that may arise.

By enrolling in **Linux training**, individuals can gain a solid foundation in **networking** principles and practices. Linux is widely used in the field of networking, making it a valuable skill for network engineers.

With the right training and certification, individuals can pursue various career paths in **network administration** or **network security**. These roles are in high demand as more businesses rely on interconnected systems for their daily operations.

Impact of Cisco Certifications on Careers

Cisco certifications can have a significant impact on one’s career trajectory in the field of network engineering. These certifications validate one’s skills and expertise in working with Cisco networking technologies, which are widely used in the industry. Employers often look for candidates with these certifications as they demonstrate a high level of knowledge and proficiency in networking.

Having Cisco certifications can open up new opportunities for career advancement and higher salary prospects. Many companies prefer to hire candidates who are Cisco certified as it reflects positively on their ability to handle complex network infrastructure. In addition, holding these certifications can also increase job security, as certified professionals are in high demand in the industry.

By completing Cisco certifications, individuals can gain a deeper understanding of **network engineering** concepts and technologies, such as **data center** networking, **wireless** networking, and **network switch** configuration. This knowledge can be applied to real-world scenarios, enabling professionals to troubleshoot network issues, design network solutions, and implement network security measures effectively.

Explore Network Engineering Courses

Looking to enhance your skills in network engineering? Consider enrolling in some of the top courses available. Courses such as **Cisco Certified Network Associate (CCNA)** and **CompTIA Network+** provide a solid foundation in networking concepts and technologies.

For those interested in specializing in certain areas, courses like **Advanced Routing and Switching** or **Network Security** can help you develop expertise in specific areas of network engineering. These courses cover topics such as **firewalls**, **intrusion detection systems**, and **secure network design**.

If you’re looking to advance your career in network engineering, consider taking courses in **Linux system administration**. Linux is widely used in networking environments and having a strong understanding of Linux can be a valuable asset in this field.

By taking these courses, you can gain the knowledge and skills needed to design, implement, and manage complex network infrastructures. Whether you’re a seasoned network administrator looking to upskill or a newcomer to the field, investing in network engineering courses can help you achieve your goals in the IT industry.

Top Certifications for Network Engineering Careers

– Linux Professional Institute Certification (LPIC): A highly recognized certification in the industry, focusing on Linux system administration and networking skills.

– Cisco Certified Network Associate (CCNA): A foundational certification for network engineers, covering routing and switching, network security, and troubleshooting.

– CompTIA Network+: A vendor-neutral certification that validates essential networking skills, including configuring and managing networks, troubleshooting issues, and implementing security measures.

These certifications are highly valued by employers in the network engineering field because they showcase your expertise and dedication to the profession. Earning them can broaden your career opportunities, open doors to higher-paying positions, and help you stay competitive in an ever-evolving field.

Options for Advancing in Network Engineering

Looking to advance in network engineering? Consider taking Linux training courses to enhance your skills and knowledge in this field. Linux is widely used in networking and mastering it can open up new opportunities for your career.

Some popular network engineering courses that focus on Linux include “Linux Networking and Administration” and “Advanced Linux System Administration.” These courses cover topics such as network configuration, security, and troubleshooting, which are essential for network engineers.

By taking these courses, you will gain a deeper understanding of Linux-based networking technologies and be better equipped to manage complex computer networks. This will not only benefit your current role but also help you stand out in the competitive job market.
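As a small taste of the troubleshooting work mentioned above, the following Python sketch checks whether a TCP port on a host accepts connections. It uses only the standard-library `socket` module. The helper name `tcp_port_open` and the example host and port are assumptions for illustration, and a real diagnosis would combine this with other tools.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds (hypothetical helper)."""
    try:
        # create_connection resolves the host and attempts the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    # Example target chosen for illustration: SSH on the local machine.
    print(tcp_port_open("127.0.0.1", 22))
```

A check like this only tells you whether the port is reachable from where the script runs. It does not explain why a connection fails, so it is a starting point rather than a full diagnostic.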

Investing in your education and skills is crucial for advancing in network engineering. Enroll in a Linux training course today and take your career to the next level.

Talk to Experts about Network Engineering Courses

Talk to experts in the field of **network engineering** to get valuable insights on the top courses available. These professionals can provide guidance on the best options based on your career goals and level of experience.

By consulting with experts, you can learn about specialized courses that focus on areas such as **Linux** training, **network security**, or **wireless networking**. These courses can help you develop advanced skills that are highly sought after in the industry.

Experts can also recommend **certification** programs that can enhance your credentials and make you more competitive in the job market. Additionally, they can provide information on online courses and in-person training options to suit your schedule and learning preferences.

Take advantage of the knowledge and experience of network engineering experts to ensure you choose the courses that will benefit your career in the long run. Don’t hesitate to reach out and ask for their advice on finding the right education path for you.