Welcome to this primer on microservices, an architectural approach that has changed how many teams build and deploy software. It covers the fundamentals of microservices, their benefits and challenges, and how they affect the way applications are built and deployed.

Enabling rapid, frequent and reliable software delivery

Microservices follow a component-based software engineering approach in which each service is built and deployed independently. This allows developers to focus on specific functionalities or business domains, making the codebase more manageable and easier to maintain.

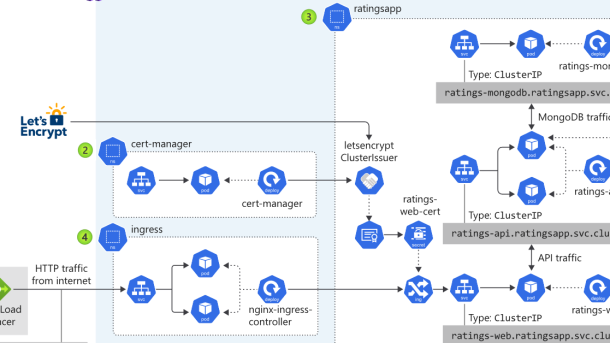

One of the key technologies that supports microservices is Kubernetes, an open-source container orchestration platform. Kubernetes simplifies the management and scaling of microservices, making it easier to deploy and maintain them in a distributed computing environment.

In the traditional software development process, making changes to a monolithic application can be risky and time-consuming. With microservices, developers can change an individual service without redeploying the entire application, provided the service's API stays compatible with its callers. This reduces the risk of introducing bugs or causing downtime.

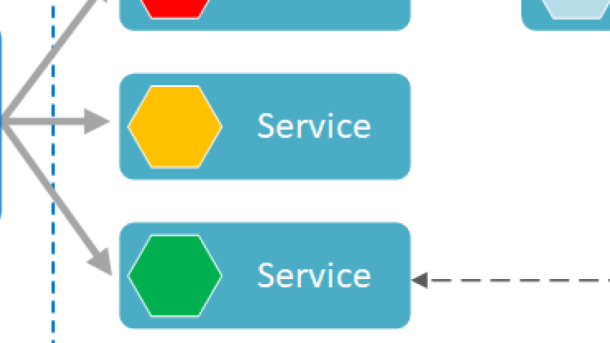

Communication between microservices is typically done through APIs, or application programming interfaces. APIs define how different services interact with each other, allowing them to exchange data and trigger actions. This enables better collaboration between development teams and facilitates the integration of different services and systems.
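
As a minimal sketch of this kind of API-based communication, the example below has a hypothetical inventory service expose a small JSON API over HTTP, and a hypothetical order service call it. The service names, the `/stock/<sku>` path, and the `STOCK` data are assumptions made for illustration. Only the Python standard library is used.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data owned by the inventory service.
STOCK = {"sku-1": 12, "sku-2": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Inventory service: exposes GET /stock/<sku> as a JSON API."""

    def do_GET(self):
        prefix = "/stock/"
        sku = self.path[len(prefix):] if self.path.startswith(prefix) else None
        if sku in STOCK:
            status, payload = 200, {"sku": sku, "quantity": STOCK[sku]}
        else:
            status, payload = 404, {"error": "unknown sku"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def start_inventory_service():
    """Start the service on a free local port and return the server object."""
    server = HTTPServer(("127.0.0.1", 0), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def is_in_stock(host, port, sku):
    """Order service side: depends only on the API contract, not on the code."""
    conn = http.client.HTTPConnection(host, port, timeout=2)
    try:
        conn.request("GET", f"/stock/{sku}")
        response = conn.getresponse()
        payload = json.loads(response.read())
    finally:
        conn.close()
    return response.status == 200 and payload["quantity"] > 0
```

The caller knows only the host, port, path, and JSON shape. The inventory service could be rewritten in another language without changing `is_in_stock`, as long as that contract holds.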

Microservices also enable organizations to take advantage of cloud technologies, such as Amazon Web Services. By deploying microservices in a cloud environment, organizations can scale individual services up or down with demand, which helps keep throughput and response times steady under load.

Implementing microservices requires a shift in mindset and a focus on design and architecture. Developers need to think about how to break down their applications into smaller, loosely coupled services, and how to manage dependencies between them. This may involve refactoring existing code or adopting a pattern language for designing microservices.

Organizational changes are also necessary to fully embrace microservices. Cross-functional teams, composed of developers, testers, and operations personnel, need to work together closely to build and deploy microservices. This requires a cultural shift towards DevOps practices, where development and operations teams collaborate throughout the software development lifecycle.

Migrating from a monolith to microservices

In a monolithic architecture, the entire application is built as a single, cohesive unit. This can lead to challenges in terms of scalability, maintainability, and agility. On the other hand, microservices break down the application into small, independent services that communicate with each other through APIs. This allows for greater flexibility, scalability, and easier deployment.

One of the main benefits of migrating to microservices is how well the architecture fits a DevOps approach. This involves bringing together development and operations teams to work collaboratively throughout the software development process. With microservices, teams can focus on developing and deploying smaller, more manageable components. This leads to faster innovation, improved communication, and better overall efficiency.

Another advantage of microservices is the ability to leverage cloud services such as Amazon Web Services. By using these services, you can offload the management of infrastructure and focus on building and deploying your application. This can greatly reduce the time to market and allow for more rapid experimentation.

However, it is important to note that migrating from a monolith to microservices is not without its challenges. One of the main challenges is the complexity that comes with distributed computing. With a monolithic architecture, everything is contained within a single codebase. In a microservices architecture, you have multiple codebases that need to work together. This requires careful design and implementation to ensure that the services can communicate effectively and efficiently.

Code refactoring is another important consideration when migrating to microservices. This involves restructuring the codebase to align with the new architecture. This can be a time-consuming process, but it is necessary to ensure that the services are decoupled and can be developed and deployed independently.

Additionally, it is important to consider the impact on the organization when migrating to microservices. This includes the skills and expertise required to develop and maintain microservices, as well as the potential impact on existing processes and workflows.

Microservices architecture and design characteristics

Microservices architecture is a design approach that focuses on building software applications as a collection of small, independent services that work together to deliver a larger application. This approach promotes modularity, scalability, and agility, making it ideal for organizations looking to innovate and deliver applications quickly to market.

One of the key characteristics of microservices architecture is its component-based software engineering approach. Each individual service within the architecture is developed and maintained independently, allowing for greater flexibility and easier code refactoring. This means that teams can work on different services simultaneously, reducing the time to market and increasing overall development speed.

Another important aspect of microservices architecture is the use of APIs to define the interfaces between services. APIs allow services to communicate with each other and exchange data, ensuring seamless integration and interoperability. This also enables the use of different programming languages and technologies within the architecture, depending on the specific requirements of each service.

By breaking down applications into smaller, focused services, microservices architecture helps manage complexity more effectively. Each service is responsible for a specific functionality or business domain, allowing for better organization and maintainability. This also lets organizations form cross-functional teams, each dedicated to a specific service or subdomain.

Many microservices architectures also use a message broker. A message broker acts as a central hub for communication between services, allowing for asynchronous communication and decoupling: a service publishes a message and continues its work without waiting for the consumers. This smooths out load spikes and limits how far a failure in one service spreads, although the broker itself must be sized and operated carefully so that it does not become a bottleneck.
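
To make the decoupling concrete, here is a toy in-memory stand-in for a message broker. It is a sketch only: a real broker runs as a separate process and adds persistence, acknowledgements, and network transport. The class name, method names, and the `order.placed` topic are hypothetical.

```python
from collections import defaultdict, deque


class InMemoryBroker:
    """Toy stand-in for a message broker: topics, subscribers, queued delivery."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> handler callables
        self._pending = deque()                # (topic, message) awaiting delivery

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, message):
        # The publisher returns immediately; it never calls a consumer directly.
        self._pending.append((topic, message))

    def deliver_pending(self):
        """Deliver queued messages; returns how many handler calls were made."""
        delivered = 0
        while self._pending:
            topic, message = self._pending.popleft()
            for handler in self._subscribers[topic]:
                handler(message)
                delivered += 1
        return delivered


# Two hypothetical consumers react to the same event independently.
broker = InMemoryBroker()
emails, shipments = [], []
broker.subscribe("order.placed", lambda msg: emails.append(msg["order_id"]))
broker.subscribe("order.placed", lambda msg: shipments.append(msg["order_id"]))

broker.publish("order.placed", {"order_id": 42})  # nothing is delivered yet
broker.deliver_pending()                          # both consumers now see order 42
```

The publisher does not know how many consumers exist. Adding a third subscriber requires no change to the publishing service.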

Implementing microservices architecture requires careful planning and consideration. It is important to identify the right boundaries for services, ensuring that each service is focused and independent. For an existing system, a migration pattern such as the strangler fig pattern lets those boundaries be introduced incrementally: new functionality is added as services while existing monolithic components are gradually replaced.

Hybrid and modular application architectures

One key aspect of hybrid architectures, which combine monolithic components with microservices, is the use of APIs to define the interfaces between different components of the system. APIs enable different parts of the application to communicate and interact with each other, creating a cohesive and integrated system. By decoupling components through APIs, organizations can easily replace or upgrade individual parts of the system without affecting the entire application.

Another important concept in hybrid architectures is the use of modular application software. Modular software is divided into smaller, independent modules that can be developed, tested, and deployed separately. This modular approach allows for faster development cycles, improved maintainability, and easier scalability. It also enables organizations to take advantage of new technologies and innovations without disrupting the entire system.

Hybrid architectures also consider the underlying infrastructure on which the application runs. By leveraging the power of cloud technologies and implementation patterns such as containerization, organizations can easily scale their applications based on demand. This flexibility allows for efficient resource utilization and cost savings.

However, it is important to be mindful of potential anti-patterns and bottlenecks that can arise in hybrid architectures. For example, improper API design or inefficient communication between services can lead to performance issues and system failures. It is crucial to have a well-defined architectural style and cross-functional team collaboration to ensure smooth integration and operation of the system.

One approach to transitioning from a monolithic architecture to a hybrid architecture is the strangler fig pattern. This pattern involves gradually replacing or refactoring parts of the monolithic application with microservices, while keeping the overall system functional. This allows organizations to incrementally adopt microservices without disrupting the existing functionality.
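
The routing idea behind the strangler fig pattern can be sketched as a small facade that sends each request either to a new service or to the legacy monolith. This is an illustrative sketch, not a production gateway. The `StranglerRouter` name, the path prefixes, and the handler functions are all hypothetical.

```python
class StranglerRouter:
    """Facade in front of the monolith; migrated paths go to new services."""

    def __init__(self, legacy_handler):
        self._legacy = legacy_handler
        self._migrated = {}  # path prefix -> handler of the new service

    def migrate(self, prefix, handler):
        """Mark a path prefix as served by a new microservice from now on."""
        self._migrated[prefix] = handler

    def handle(self, path):
        # The longest matching prefix wins; anything else stays on the monolith.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        return self._legacy(path)


def monolith(path):
    return f"monolith handled {path}"


def orders_service(path):
    return f"orders-service handled {path}"


router = StranglerRouter(monolith)
before = router.handle("/orders/1")    # still served by the monolith
router.migrate("/orders", orders_service)
after = router.handle("/orders/1")     # now served by the new service
other = router.handle("/billing/9")    # untouched functionality stays put
```

Each call to `migrate` moves one slice of functionality. Removing the entry rolls that slice back to the monolith, which is what keeps the migration incremental.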

Microservices and APIs

Microservices can be defined as small, independent, and loosely coupled services that work together to form an application. Each microservice is responsible for a specific functionality or business capability. This architectural style allows teams to work on different parts of the application simultaneously, promoting faster development and easier maintenance.

One of the key advantages of microservices is their ability to foster innovation. With each microservice being developed and deployed independently, teams have the freedom to experiment and introduce new features without affecting the entire application. This promotes agility and allows for faster time-to-market.

APIs (Application Programming Interfaces) serve as the interface between different software components or systems. They define how different parts of an application can interact with each other. APIs enable seamless communication and data exchange, allowing developers to leverage the functionality of existing services or systems.

By using APIs, developers can build applications that are modular and scalable. They can integrate third-party services or components, saving time and effort in development. APIs also enable the creation of cross-functional teams, where different teams can work on different parts of the application, leveraging the power of specialization.

Microservices and APIs go hand in hand. Microservices expose their functionality through APIs, allowing other microservices or external systems to consume their services. This decoupling of services through APIs enables flexibility and reusability, as each microservice can be independently scaled, updated, or replaced without affecting the entire application.

However, it is important to note that while microservices and APIs offer numerous benefits, they also come with certain challenges. A poorly designed API or a bottleneck in the microservices architecture can lead to performance issues or failures. It is crucial to carefully plan and design the architecture, keeping in mind factors such as scalability, fault tolerance, and security.

Microservices and containers

A microservices architecture is an approach in which an application is divided into small, independent services that can be developed, deployed, and scaled independently. Each microservice focuses on a specific business capability and can communicate with other microservices through well-defined interfaces.

Containers, on the other hand, provide a lightweight and portable environment for running microservices. They encapsulate the application and its dependencies, making it easy to package, distribute, and run the application consistently across different environments.

By adopting microservices and containers, organizations can achieve greater agility, scalability, and resilience in their software development processes. However, it is important to understand the key considerations and challenges associated with these concepts.

One important consideration is the need for a cross-functional team that includes developers, operations personnel, and other stakeholders. This team should work together to design, develop, and deploy microservices effectively. Collaboration and communication are crucial to ensure that the microservices are aligned with the overall business goals and requirements.

Another important aspect is how each microservice is defined. Each microservice should have a clear and well-defined responsibility, and should be designed to be loosely coupled with other microservices. This allows for independent development and deployment of each microservice, which can greatly enhance the overall agility and scalability of the application.

However, it is also important to be aware of potential anti-patterns that can arise when implementing microservices. For example, bottlenecks can occur if a single microservice becomes a performance or scalability constraint for the entire application. It is important to design the microservices in such a way that they can be scaled independently and can handle the expected workload.

In addition, failure is inevitable in any distributed system, and it is important to design the microservices to be resilient and able to handle failures gracefully. This can be achieved through techniques such as circuit breaking and retry mechanisms.
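
A circuit breaker can be sketched in a few lines. The version below is a simplified illustration under stated assumptions: it counts consecutive failures, opens after a threshold, fails fast while open, and lets one trial call through after a timeout. The class name, parameters, and defaults are hypothetical, and the `clock` parameter exists only so the behaviour can be exercised without waiting.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the dependency."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("dependency recently failed; failing fast")
            # Timeout elapsed: half-open, so one trial call is let through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open (or re-open) the circuit
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Wrapping calls to a remote service in `breaker.call(...)` means that once the service is known to be failing, callers get an immediate `CircuitOpenError` instead of waiting on timeouts, which gives the failing service room to recover.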

From a technology perspective, Linux is a popular choice for running microservices and containers due to its stability, performance, and extensive tooling support. Therefore, it would be beneficial to invest in Linux training to gain a deeper understanding of the platform and its capabilities.

Challenges of a microservices architecture

While a microservices architecture offers numerous benefits, it also presents its fair share of challenges. These challenges can arise from various aspects of the architecture, including its complexity, communication between services, and the management of data.

One of the key challenges of a microservices architecture is the increased complexity it brings compared to a monolithic architecture. With multiple services interacting with each other, it can be challenging to understand the overall flow of the application and troubleshoot issues. Doing so requires a thorough understanding of each service and its dependencies, which is time-consuming to build and hard to maintain as the number of services grows.

Another challenge is communication between services. In a microservices architecture, services need to communicate with each other through APIs or message queues. This introduces potential points of failure and bottlenecks in the system. If one service goes down or experiences issues, it can affect the functionality of other services that depend on it. Proper error handling and fault tolerance mechanisms need to be implemented to ensure the system can gracefully handle such failures.
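
One common error-handling mechanism for transient failures between services is retrying with exponential backoff. The helper below is a minimal sketch, assuming that `ConnectionError` and `TimeoutError` are the transient errors worth retrying. The function name and defaults are hypothetical, and the `sleep` parameter is injectable so the delays can be observed in a test.

```python
import time


def call_with_retry(func, attempts=3, base_delay=0.1, sleep=time.sleep,
                    retry_on=(ConnectionError, TimeoutError)):
    """Call func(); on a transient error wait base_delay * 2**n and try again."""
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)
```

Retries suit operations that are safe to repeat, such as reads. For operations with side effects, the called service typically needs to be idempotent, or the retry may apply the same change twice.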

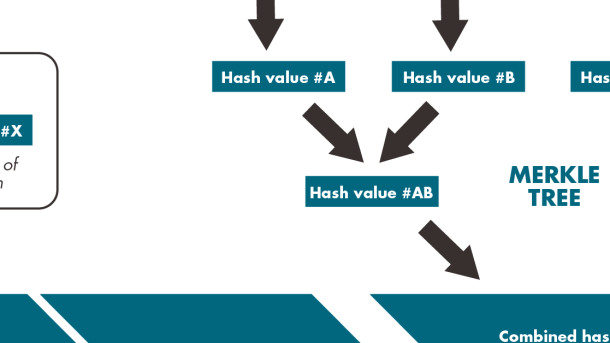

Managing data in a microservices architecture can also be challenging. Each service may have its own database or data store, leading to the problem of data consistency. Coordinating updates and ensuring data integrity across services requires careful planning and implementation. Additionally, data duplication can become an issue, as multiple services may need access to the same data. Approaches such as event sourcing, and designing for eventual consistency rather than immediate consistency across services, can be used to address these challenges.
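
The following sketch illustrates event sourcing and eventual consistency together. One service appends events to a log and a second service keeps its own read model by replaying them. The account domain, event names, and class names are hypothetical, and the log lives in memory only for the sake of the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str    # "deposited" or "withdrawn" (hypothetical event names)
    amount: int


class AccountEventStore:
    """Append-only log; current state is derived by replaying events."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)

    def events_since(self, offset):
        return self._events[offset:]


class BalanceProjection:
    """Read model kept by another service; it catches up from the log."""

    def __init__(self):
        self.balance = 0
        self.offset = 0  # number of events already applied

    def catch_up(self, store):
        for event in store.events_since(self.offset):
            if event.kind == "deposited":
                self.balance += event.amount
            elif event.kind == "withdrawn":
                self.balance -= event.amount
            self.offset += 1


store = AccountEventStore()
projection = BalanceProjection()
store.append(Event("deposited", 100))
store.append(Event("withdrawn", 30))
stale_balance = projection.balance   # read model has not caught up yet
projection.catch_up(store)
fresh_balance = projection.balance   # consistent once the events are applied
```

Between the append and the `catch_up` call the read model is stale, which is the window that eventual consistency accepts. Because the log is the source of truth, a new read model can be built at any time by replaying from offset zero.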

Scaling a microservices architecture can be more complex than scaling a monolithic architecture. Each service may need to be scaled independently based on its specific requirements, which can be challenging to manage. Load balancing and auto-scaling mechanisms need to be in place to handle varying levels of traffic to different services.
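
Both mechanisms can be illustrated with a short sketch. The scaling function follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired replicas = ceil(current replicas × current metric / target metric), simplified here by leaving out tolerances and stabilization windows. The balancer is a plain round-robin rotation. Function names, class names, and the replica limits are hypothetical.

```python
import itertools
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale proportionally to load, clamped to the configured limits."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


class RoundRobinBalancer:
    """Hands out service instances in rotation."""

    def __init__(self, instances):
        self._cycle = itertools.cycle(list(instances))

    def next_instance(self):
        return next(self._cycle)


# Four replicas at 90% average CPU with a 60% target call for six replicas.
scaled_up = desired_replicas(4, current_metric=90, target_metric=60)

balancer = RoundRobinBalancer(["orders-1", "orders-2"])
first_three = [balancer.next_instance() for _ in range(3)]
```

Because each service has its own metric and its own limits, a busy service can scale out without the rest of the application scaling with it.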

Furthermore, the cost of implementing and maintaining a microservices architecture can be higher than that of a monolithic architecture. With multiple services and infrastructure components, the overall technology stack becomes more complex, requiring additional resources for development, deployment, and monitoring. It is essential to carefully evaluate the benefits and costs before deciding to adopt a microservices architecture.

Red Hat’s leadership in microservices architecture

Red Hat, a leading provider of open source solutions, has established itself as a leader in microservices architecture. Microservices architecture, also known as the microservices style, is an approach to developing software applications as a collection of small, independent services that work together to deliver a larger application. This architecture allows for greater flexibility, scalability, and agility compared to traditional monolithic applications.

One of the foundations of Red Hat’s leadership in microservices architecture is its expertise in Linux. Linux is the operating system of choice for many microservices-based applications due to its stability, security, and performance. Red Hat offers comprehensive Linux training programs that can help developers gain the necessary skills to build and manage microservices-based applications on Linux.

In addition to Linux expertise, Red Hat has also developed a range of tools and technologies specifically designed for microservices architecture. One such tool is OpenShift, a container application platform that simplifies the deployment and management of microservices-based applications. OpenShift provides a scalable and reliable infrastructure for running containers, enabling developers to easily build, deploy, and scale their microservices applications.

Another area where Red Hat excels in microservices architecture is its adoption of industry-standard patterns and practices. Microservices architecture relies on a pattern language, which is a set of design patterns and principles that guide the development of microservices-based applications. Red Hat has contributed to the development of these patterns and practices, ensuring that its customers can build robust and scalable microservices applications using industry best practices.

Furthermore, Red Hat understands the importance of collaboration and integration in microservices architecture. Microservices-based applications often consist of multiple services that need to communicate with each other. Red Hat provides tools and technologies that facilitate seamless integration between these services, enabling developers to build complex and interconnected applications.