Welcome to a comprehensive guide on navigating the Linux command line for beginners. Whether you’re new to the world of coding or looking to expand your skills, this tutorial will help you master the basics of the command line interface.

Introduction to Linux Command Line

In this section, you will learn the basics of the Linux command line. The command-line interface lets you interact directly with the operating system using text commands. This differs from the graphical user interfaces of systems like Microsoft Windows or macOS, which rely on mouse clicks and icons.

Understanding how to navigate the file system, run applications, and manage files using the command line is essential for anyone working with Linux. By learning these skills, you will gain a deeper understanding of how the system works, and be able to perform tasks more efficiently.

Whether you are a beginner or looking to expand your knowledge, mastering the command line is a valuable skill for anyone working with Linux. Take the first step towards becoming a Linux power user by diving into these tutorials.

History and Rise of Linux

Linus Torvalds created Linux, the open-source operating system kernel, in 1991. It was inspired by the Unix operating system and grew into a free alternative to proprietary systems such as Microsoft Windows and macOS. Linux gained popularity due to its stability, security, and flexibility. Its command-line interface lets users interact with the system directly, giving them more control over their computing environment. Understanding the Linux command line is essential for system administrators, developers, and anyone looking to dive deeper into technology.

By learning the basics of navigating the file system, managing permissions, and running applications from the command line, beginners can unlock the full potential of Linux.

Components of Linux System

The **components** of a Linux system include the **kernel**, **shell**, **commands**, **utilities**, and **libraries**. The **kernel** is the core of the operating system, while the **shell** is the interface through which users interact with the system. **Commands** are used to perform specific tasks, and **utilities** are programs that help users manage the system. **Libraries** contain code that can be reused by applications.

Understanding these components is essential for navigating the **Linux command line** effectively. By mastering the **commands** and **utilities** available, users can perform a wide range of tasks, from managing files and directories to configuring system settings. Learning to work with **libraries** can also help users develop their own **applications**.

Linux Distributions Overview

Linux distributions are operating systems built around the Linux kernel, each bundling its own selection of software, defaults, and tools. Some popular distributions include Ubuntu, Fedora, and CentOS.

Each distribution ships a package manager, which is used to install, update, and remove software. For example, Ubuntu uses APT (Advanced Package Tool), while Fedora uses DNF (Dandified YUM).

When choosing a distribution, consider factors like ease of use, stability, and community support. It’s also important to check if the distro is compatible with your hardware and software requirements.

Learning how to navigate the command line interface is crucial for beginners in Linux. Commands like ls, cd, and mkdir are essential for managing files and directories. Practice using these commands in a terminal emulator to get comfortable with the command line.

Understanding the basics of Linux distributions and command line commands will set a solid foundation for further learning and exploring the world of Linux.

Choosing the Right Distribution

Ubuntu, Fedora, and CentOS each offer their own features and support options. Try a few distributions in a virtual machine or from a live USB before committing to one.

It’s important to choose a distribution that you feel comfortable working with, as this will ultimately impact your learning experience and success with Linux.

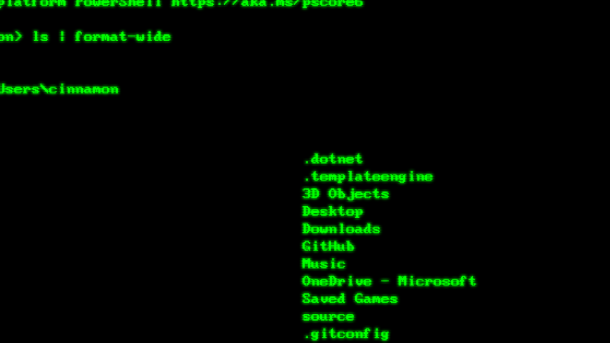

Working with Directories in Linux

To move into a directory, use the **cd** command followed by the directory name. Once you are in a directory, you can list the files and directories it contains using the **ls** command. Use **ls -l** to view more detailed information, such as permissions, owner, and size. To create a new directory, use the **mkdir** command followed by the directory name.
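The commands above can be tried safely with a short sketch like the one below. It assumes a throwaway directory created with mktemp, and the directory name "projects" is only an illustration. The pwd command, which prints the current directory, is added here as a convenience.

```shell
# Work inside a throwaway directory so nothing in your home folder changes.
demo=$(mktemp -d)
cd "$demo"

mkdir projects    # create a new directory named "projects" (hypothetical name)
ls                # list the current directory; shows "projects"
ls -l             # long listing: permissions, owner, size, and date
cd projects       # move into the new directory
pwd               # print the full path of the current directory
```

To go back up one level, cd .. moves to the parent directory.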

File Management Commands in Linux

ls is used to list the contents of a directory, cd to change directories, and mkdir to create new directories.

To copy files, you can use cp, and to move or rename them, you can use mv.

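To remove files, you can use the rm command; to remove a directory and everything in it, use rm -r. Deleted files do not go to a trash folder, so double-check the name before pressing Enter.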

Understanding and utilizing these basic file management commands will help beginners get comfortable with using the Linux command line interface.
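As a rough illustration of these file management commands working together, the sketch below copies, renames, moves, and removes a few files. All file and directory names are hypothetical, and everything happens in a temporary directory created with mktemp.

```shell
# Work inside a throwaway directory.
demo=$(mktemp -d)
cd "$demo"

echo "hello" > notes.txt     # create a small file to work with
cp notes.txt backup.txt      # copy: both files now exist
mv backup.txt draft.txt      # mv with a new name renames the file
mkdir old
mv draft.txt old/            # mv with a directory target moves the file
cp notes.txt scratch.txt
rm scratch.txt               # delete a single file (there is no undo)
rm -r old                    # -r is needed to delete a directory and its contents
ls                           # only notes.txt is left
```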

Working with File Contents

To work with file contents in Linux, you can use commands like cat, grep, and less to view, search, and navigate through files. The cat command displays the entire contents of a file, while grep searches for specific text within files. The less command opens a file in a pager so you can scroll through it one screen at a time. You can also use the head and tail commands to display the beginning or end of a file, respectively. Together, these commands cover most everyday needs for inspecting and analyzing file contents on the command line.
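The following sketch shows these viewing commands on a sample file. The file name sample.txt and its 20 numbered lines are assumptions made for the example. The less command is interactive, so it appears only as a comment.

```shell
# Work inside a throwaway directory.
demo=$(mktemp -d)
cd "$demo"

# Build a 20-line sample file: "line 1" through "line 20".
for i in $(seq 1 20); do
    echo "line $i"
done > sample.txt

cat sample.txt                 # print the whole file
head -n 3 sample.txt           # first three lines
tail -n 2 sample.txt           # last two lines
grep "line 1" sample.txt       # every line containing the text "line 1"
grep -c "line 1" sample.txt    # count the matching lines instead of printing them
# less sample.txt              # interactive pager; press q to quit
```

Note that grep matches text anywhere in a line, so "line 1" also matches "line 10" through "line 19".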

Essential Linux Commands

In your journey to mastering Linux, understanding these essential commands is crucial.

One of the most commonly used commands is ls, which lists the contents of a directory.

To navigate through directories, use cd followed by the directory name.

For creating new directories, mkdir is the command to use.

To copy files, use cp, and to move them, use mv.

To remove files or directories, rm is the command you need.

Understanding the Terminal

The terminal is a powerful tool in Linux that allows you to interact with the operating system using text commands. It provides a way to navigate the file system, run programs, and perform various tasks efficiently. Understanding how to use the terminal is essential for anyone looking to work with the Linux command line.

With commands like ls to list files, cd to change directories, and mkdir to create directories, you can easily manage your files and folders. You can also use commands like cp to copy files, mv to move files, and rm to remove files. These basic commands form the foundation of working in the terminal.

By mastering the terminal, you can become more efficient in your tasks and gain a deeper understanding of how Linux works. It may seem intimidating at first, but with practice, you’ll soon feel comfortable navigating the Linux command line.

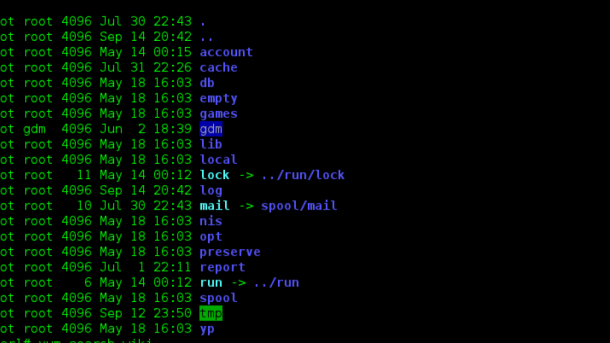

File Permissions in Linux

File permissions are crucial for maintaining the security of a Linux system. Each file and directory has permissions that specify who can read, write, or execute it. Permissions are set separately for three categories: the owner, the group, and others. You can view the permissions of a file using the ls -l command in the terminal.

To change permissions, use the chmod command followed by a mode and the file name. The mode can be symbolic, such as u+x to add execute permission for the owner, or octal, such as 644 for owner read/write and read-only access for everyone else. Understanding and managing file permissions is essential for protecting your system from unauthorized access and ensuring the integrity of your files and data.
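A minimal sketch of viewing and changing permissions follows. The script name hello.sh is hypothetical, and the example assumes the temporary directory allows running scripts, which is the usual default.

```shell
# Work inside a throwaway directory.
demo=$(mktemp -d)
cd "$demo"

# Create a two-line script to experiment on.
printf '#!/bin/sh\necho "it works"\n' > hello.sh

chmod 644 hello.sh    # octal mode: owner read/write, group and others read-only
ls -l hello.sh        # the permission string starts with -rw-r--r--
chmod u+x hello.sh    # symbolic mode: add execute permission for the owner
ls -l hello.sh        # the permission string now starts with -rwxr--r--
./hello.sh            # runs because the owner is allowed to execute it
```

In the permission string, the first character is the file type, followed by three characters each for the owner, the group, and others.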

Command Line Editors: Nano and Vim

In this section, we will delve into two popular command line editors: **Nano** and **Vim**. These editors are essential tools for navigating and editing files directly from the terminal in Linux.

**Nano** is a beginner-friendly editor with simple commands displayed at the bottom of the screen. It is great for quick edits and small tasks.

On the other hand, **Vim** is a powerful editor favored by experienced users for its extensive features and customizability. It has a steep learning curve but offers unparalleled efficiency once mastered.

Whether you prefer the simplicity of Nano or the flexibility of Vim, mastering a command line editor is crucial for efficient Linux operations.

Problem Solving and Creative Thinking

Working on the command line also builds problem-solving skills. Troubleshooting issues, manipulating files, and executing scripts all push you to break a task into small steps and combine simple tools into a solution. Practical exercises in system administration and automation are a good way to build this habit and to find creative solutions to real problems.

Obtaining Linux and Installation

Before you begin, back up any important files to avoid data loss. To obtain Linux, **download** the distribution of your choice from the official website or a trusted source. Once downloaded, create a **bootable** USB drive or DVD and follow the installation instructions provided by the distribution. During the installation process, choose your preferred **partition** scheme and set up user accounts.

After installation is complete, you can start exploring the Linux operating system via the command line interface. Familiarize yourself with basic commands and start learning how to navigate the **file system**.

Tips and Tricks for Linux Users

– Familiarize yourself with basic commands such as ls, cd, mkdir, and rm to navigate the file system and manage files.

– Take advantage of the man command to access the manual pages for specific commands and understand their usage.

– Utilize grep to search for specific patterns within files and directories quickly.

– Use chmod to manage file permissions and control who can read, write, or execute certain files.

– Experiment with piping and redirection to combine multiple commands and streamline your workflow.

– Practice using the tab key for auto-completion to save time and avoid typos in long commands.

– Take advantage of aliases to create shortcuts for commonly used commands.

– Explore shell scripting to automate repetitive tasks and increase efficiency in your workflow.
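Several of the tips above can be combined in one short session. The sketch below is only an illustration: the file names are hypothetical, and the alias name ll is a common convention rather than something built in. Aliases normally take effect in interactive shells, so the alias is defined here but not invoked.

```shell
# Work inside a throwaway directory.
demo=$(mktemp -d)
cd "$demo"

# Redirection: > writes a command's output to a file, >> appends to it.
echo "banana" > fruits.txt
echo "apple" >> fruits.txt
echo "cherry" >> fruits.txt

# Piping: | feeds one command's output into the next command.
sort fruits.txt | head -n 1     # prints "apple"
grep -c "an" fruits.txt         # prints 1 (only "banana" contains "an")
sort fruits.txt > sorted.txt    # save the sorted list to a new file

# Alias: a shortcut for a longer command, for use in interactive shells.
alias ll='ls -l'

# Script-style loop: make a .bak copy of every .txt file.
for f in *.txt; do
    cp "$f" "$f.bak"
done
ls
```

To read more about any command used here, man followed by the command name (for example, man sort) opens its manual page.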

Exploring Linux Command Operations

This guide covers the essential operations for working on the command line: executing commands, managing files and directories, and customizing your environment. From there, shell scripting lets you automate tasks and improve efficiency, and an understanding of file-system permissions and memory management helps you keep your system running well.

Whether you’re a novice or looking to expand your skills, these tutorials will provide a solid foundation for mastering Linux commands. Start your journey towards becoming a proficient Linux user today and unlock the full potential of this powerful operating system.

The Shell Prompt and Path

The **shell prompt** is where you interact with the system by typing commands. It typically displays your username, hostname, and the current directory. The **path**, stored in the PATH environment variable, is a colon-separated list of directories that the shell searches when you type the name of a command or program.

Understanding the shell prompt and path is crucial for working efficiently on the command line. The prompt provides valuable information, such as your current location in the file system. The path determines which programs you can run by name alone: a program or script in a directory outside the path must be run by typing its full path, unless you add that directory to the PATH variable.
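The sketch below illustrates both ways of running a script: by its full path, and by name after its directory has been added to PATH. The script name greet and the temporary directory are assumptions made for the example, and the PATH change lasts only for the current shell session.

```shell
echo "$PATH"      # colon-separated list of directories searched for commands
command -v ls     # show what the shell runs when you type "ls"

# Create a hypothetical script directory with one small script in it.
bindir=$(mktemp -d)
printf '#!/bin/sh\necho "hello from greet"\n' > "$bindir/greet"
chmod +x "$bindir/greet"

"$bindir/greet"          # run the script by giving its full path
PATH="$PATH:$bindir"     # append the directory to PATH for this session
greet                    # now the bare name works from any directory
```

To make such a change permanent, the PATH line is usually added to a shell startup file such as ~/.bashrc, though the exact file depends on the shell in use.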

Mastering these concepts will help you become more proficient in using the Linux command line and navigating the file system.

Disclaimer and General House Rules

Before diving into these tutorials, keep a few key points in mind. First, always exercise caution when using source code from the internet, as it may contain proprietary software or malicious code. Second, remember that the command line is a powerful tool, so be careful when executing commands that could affect your computer system. Lastly, always be mindful of file-system permissions and ensure you have the necessary rights to perform certain actions.

Next Steps in Linux Command Line Learning

After mastering the basics of the Linux command line, the next steps involve diving deeper into advanced commands and concepts. **Practice** is key to gaining proficiency, so be sure to **experiment** with different commands and options.

Consider **enrolling** in a Linux training course to further enhance your skills and knowledge. These courses often cover more **complex** topics like shell scripting, automation, and system administration.

Reading **online** tutorials and forums can also be beneficial, as they provide **practical** examples and solutions to common issues. Don’t be afraid to ask questions and seek help when needed.