Unlock the full potential of your Linux operating system with our comprehensive guide to mastering the command line interface.

Understanding Linux Basics

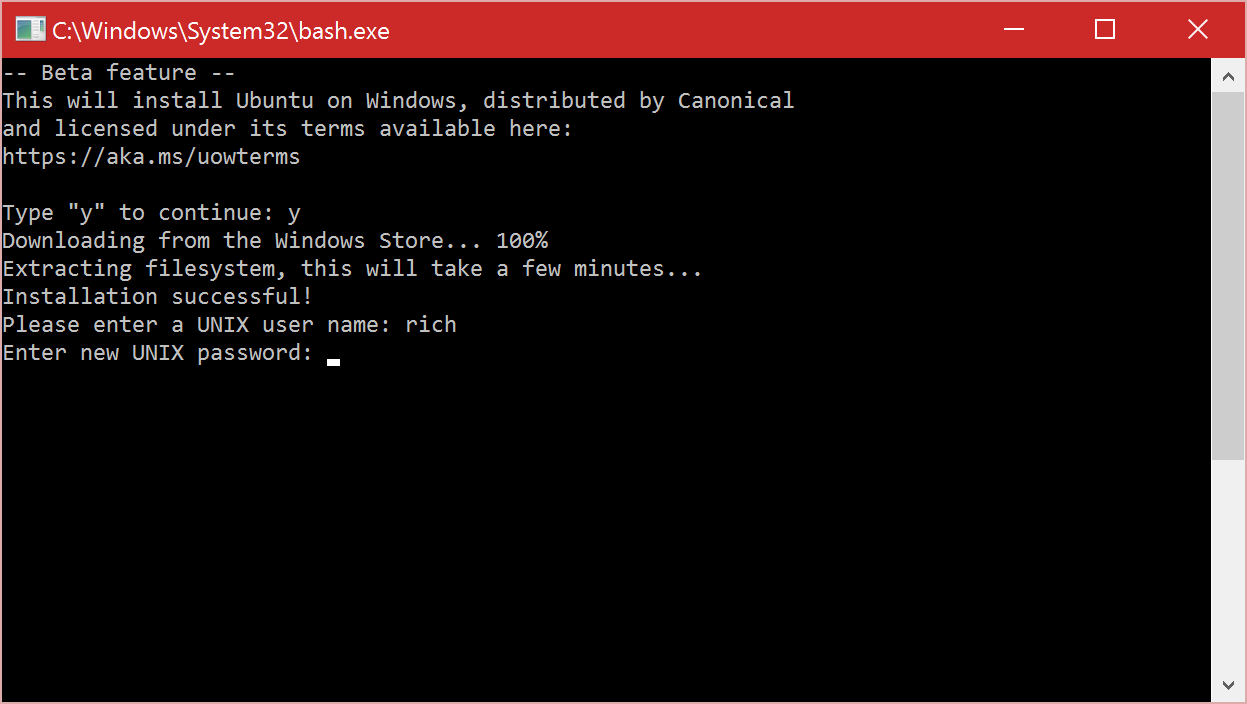

To master the Linux command line, it helps to understand the basics of how the system operates. Linux is built on the **Linux kernel**, created by Linus Torvalds. Most distributions combine the kernel with tools from the **GNU Project**, such as the Bash shell and the core command-line utilities. Unlike **proprietary software** such as Microsoft Windows or macOS, Linux is open source, so anyone can study and modify its **source code**. On the command line, you interact directly with the operating system, typing commands to manage files and directories and to run programs. Understanding **file-system permissions** is also crucial, because they determine who can read, write, or execute each file on a Linux system.

By mastering the basics of the Linux command line, users can gain a deeper understanding of how their computer operates and develop valuable **automation** skills.

Introduction to Linux Operating Systems

Understanding the **Linux command line** is essential for interacting with the system. Through the command line, users can navigate the **file system**, manage **file-system permissions**, and run **shell scripts** for automation. This powerful tool enables precise control and customization of the system, making it a valuable skill for **DevOps** professionals and **data analysis** experts alike.

Exploring Linux Distributions

When exploring **Linux distributions**, it’s essential to get familiar with the **Linux command line**. This powerful tool allows for efficient navigation, file manipulation, and system administration. Understanding the command line can open up a world of possibilities for managing your Linux system effectively.

By mastering the **Linux command line**, you can streamline your workflow, automate tasks, and troubleshoot issues more efficiently. Whether you’re a beginner or an experienced user, diving into the command line can enhance your Linux skills significantly. With practice and patience, you can become proficient in utilizing the command line to its full potential.

Take the time to explore different **Linux distributions** and their unique features. Distributions differ in areas such as the package manager (for example, apt on Debian and Ubuntu, dnf on Fedora), the release schedule, and the default desktop environment, so it is worth finding the one that suits your needs. Experimenting with a few of them will deepen your understanding of Linux and help you find the right fit.

Working with Directories in Linux

In Linux, directories organize files and give the file system its tree structure. You can create directories with the mkdir command and remove empty ones with rmdir. To list the contents of a directory, use the ls command.

You can move between directories using the cd command. To move up one directory level, use cd .. (the two dots always refer to the parent directory). Understanding the directory structure is essential for efficient navigation and file management in Linux.
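
A minimal sketch of these directory commands, assuming Bash or another POSIX-compatible shell. It works inside a temporary directory created with mktemp so nothing existing is changed; the directory names projects, demo, and notes are hypothetical. The -p option of mkdir, which creates missing parent directories in one step, goes slightly beyond the text above.

```bash
# Work in a throwaway directory so nothing existing is touched.
work=$(mktemp -d)
cd "$work" || exit 1

mkdir projects                 # create a single directory
mkdir -p projects/demo/notes   # -p also creates any missing parent directories
ls projects                    # lists: demo

cd projects/demo/notes         # move down into the deepest new directory
cd ..                          # move up one level, to projects/demo
pwd                            # prints the full path, ending in /projects/demo

rmdir notes                    # rmdir removes only empty directories
ls                             # prints nothing: demo is now empty
```

When you are done experimenting, rm -r "$work" removes the whole scratch directory.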

Managing Files in Linux

When working in Linux, mastering file management is crucial, and the command line interface makes file manipulation efficient. Use ls to list files, cd to change directories, and mv to move or rename files. Use cp to copy files and rm to remove them; note that rm deletes files permanently instead of moving them to a trash folder. Use pwd to print the current working directory. Understanding file permissions and ownership is also essential.

Use chmod to change permissions and chown to change ownership. By mastering file management in Linux, you can efficiently organize and manipulate your files for better productivity.
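
The following sketch walks through the basic file commands in a throwaway directory from mktemp. The file names are hypothetical, and the chown line is left as a comment because changing a file's owner normally requires root privileges; alice is a placeholder user name.

```bash
work=$(mktemp -d)
cd "$work" || exit 1
pwd                                   # print the current working directory

printf 'draft\n' > report.txt         # create a small file to work with
cp report.txt report-backup.txt       # copy: both files now exist
mv report.txt final-report.txt        # move/rename: report.txt no longer exists
mkdir archive
mv report-backup.txt archive/         # move a file into a directory
ls archive                            # lists: report-backup.txt

rm final-report.txt                   # rm deletes immediately; there is no trash bin
ls                                    # lists: archive

# Changing ownership normally needs root ("alice" is a placeholder user):
# sudo chown alice:alice archive/report-backup.txt
```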

Handling File Contents in Linux

When working with file contents in Linux, the command line is a powerful tool that can help you efficiently manage and manipulate data. To view the contents of a file, you can use the cat command followed by the name of the file. If you want to display the contents of a file one page at a time, you can use the less command.

To search for specific content within a file, you can use the grep command followed by the search term and the file name. If you need to edit a file, you can use a text editor like nano or vim. Remember to save your changes before exiting the editor.
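
Here is a small sketch of viewing and searching a file, assuming a standard grep. The log contents are invented sample data, and the interactive commands (less, nano, vim) appear only as comments because they wait for keyboard input.

```bash
work=$(mktemp -d)
cd "$work" || exit 1

# Create a small sample file (the contents are made up for illustration).
printf 'INFO start\nERROR disk full\nINFO retry\nerror: timeout\n' > app.log

cat app.log              # print the whole file
grep ERROR app.log       # print matching lines: ERROR disk full
grep -i error app.log    # -i ignores case, so both error lines match
grep -n INFO app.log     # -n prefixes each match with its line number
grep -c INFO app.log     # -c prints only the number of matching lines: 2

# Interactive commands, shown here as comments:
# less app.log   (Space pages forward, b pages back, /word searches, q quits)
# nano app.log   (Ctrl+O saves, Ctrl+X exits)
# vim app.log    (i to insert, then Esc and :wq to save and quit)
```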

By mastering these commands, you can easily handle file contents in Linux and improve your overall efficiency when working with data.

Introduction to Linux Shells

A **shell** is the program that reads the commands you type and asks the operating system to carry them out. The most commonly used shell is Bash (the Bourne Again SHell), which is the default on most Linux distributions. Mastering it pays off because the command line is a powerful tool for managing files, processes, and system configuration. Once you can navigate it comfortably, you can perform tasks such as file manipulation, text processing, and automation efficiently.
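
To make the idea of a shell script concrete, the sketch below writes a tiny script, marks it executable, and runs it. The file name greet.sh is hypothetical, and the script sticks to POSIX sh syntax (with #!/bin/sh) so it behaves the same under Bash or any other sh-compatible shell.

```bash
work=$(mktemp -d)
cd "$work" || exit 1

# Write a tiny script. The first line (the "shebang") tells the system
# which interpreter should run the file.
cat > greet.sh <<'EOF'
#!/bin/sh
name=${1:-world}          # use the first argument, or "world" if none is given
echo "Hello, $name"
EOF

chmod +x greet.sh         # mark the script as executable
./greet.sh                # prints: Hello, world
./greet.sh Linux          # prints: Hello, Linux

echo "Your login shell: ${SHELL:-unknown}"
```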

Understanding Linux shells is essential for anyone looking to work in **DevOps** or **system administration** roles.

Navigating the Linux Command Line

To master the Linux command line, you need to understand basic commands like ls for listing files and directories, and cd for changing directories. Familiarize yourself with man pages for detailed information on commands. Practice navigating the file system using commands like pwd to show the present working directory.

Use cp to copy files, and mv to move or rename them. Create directories with mkdir and delete empty ones with rmdir; rm -r removes a directory together with everything inside it, so use it carefully. Explore grep for searching within files, and chmod for changing file permissions.

As you gain proficiency, start combining commands with pipes (the | character), which feed the output of one command into the input of the next. Don’t be afraid to experiment and practice regularly to become fluent in the Linux command line.
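
A sketch of pipes in action, assuming the standard sort, uniq, grep, and wc utilities. The sample file fruit.txt is hypothetical; the first pipeline is a common idiom for counting how often each line appears.

```bash
work=$(mktemp -d)
cd "$work" || exit 1
printf 'apple\nbanana\napple\ncherry\napple\nbanana\n' > fruit.txt

# Each | sends the output of the command on its left into the command on its right.
sort fruit.txt | uniq -c | sort -rn   # count each distinct line, most frequent first
grep -c apple fruit.txt               # 3 lines contain "apple"
ls | wc -l                            # count the entries here (just fruit.txt)

# man pages document every option used above, for example:
# man uniq
```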

Essential Linux Commands for Beginners

pwd – Print the current working directory. This command shows you where you are in the file system.

ls – List the contents of a directory. Use this command to view files and folders in the current directory.

cd – Change directory. Move between different directories with this command.

mkdir – Make a new directory. Use this command to create a new folder in the current directory.
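
The commands in this list can be tried together as follows. This is a sketch that uses a temporary directory; the names drafts, archive, and .hidden-config are hypothetical, and the -a and -l options of ls and the shortcuts ~ and cd - go slightly beyond the list above.

```bash
pwd                      # where am I? prints an absolute path such as /home/yourname
work=$(mktemp -d)        # scratch directory for this example
cd "$work" || exit 1

mkdir drafts             # relative path: created inside the current directory
mkdir "$work/archive"    # absolute path: works no matter where you are
touch .hidden-config     # names starting with "." are hidden by default

ls                       # lists archive and drafts
ls -a                    # also shows ., .., and .hidden-config
ls -l                    # long format: permissions, owner, size, modification time

cd drafts
cd -                     # return to the previous directory (and print its path)
cd ~                     # ~ is shorthand for your home directory
```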

Mastering File Operations in Linux

In Linux, mastering file operations is essential for efficient and effective command line usage. Knowing how to navigate directories and how to create, copy, move, and delete files is a fundamental skill every Linux user should have.

Learning to list files and view their permissions with ls, and to copy, move, and delete them with cp, mv, and rm, will greatly enhance your productivity. To locate specific files quickly, use grep to search by content and find to search by metadata such as name, type, size, or modification time.
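
As a sketch of searching by metadata versus content, the block below builds a tiny hypothetical project tree and queries it with find and grep. It assumes a grep that supports -r for recursive search, which GNU grep on Linux does.

```bash
work=$(mktemp -d)
cd "$work" || exit 1
mkdir -p src logs
printf 'TODO: fix parser\n' > src/parser.c
printf 'all good\n' > src/main.c
printf 'old entry\n' > logs/app.log

find . -name '*.c'          # by name: ./src/parser.c and ./src/main.c
find . -type d              # by type: only directories (., ./src, ./logs)
find . -type f -mtime -1    # by age: regular files modified within the last 24 hours
grep -rl TODO .             # by content: prints ./src/parser.c
grep -rn TODO src           # -n adds the line number to each match
```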

By mastering file operations in Linux, you will be able to efficiently manage your files and directories, making your command line experience smoother and more effective. Explore the power of the Linux command line and take your skills to the next level.

Learning Common Linux Commands

The grep command searches for text inside files, while find searches a directory tree for files that match criteria such as name or type. Learning how to use chmod and chown gives you control over file permissions and ownership. Mastering sudo allows you to run individual commands with administrative (root) privileges.
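
A short sketch of chmod using both octal and symbolic modes, run on a hypothetical file in a temporary directory. The sudo chown line is left commented out because it needs administrative rights; alice and developers are placeholder user and group names.

```bash
work=$(mktemp -d)
cd "$work" || exit 1
printf 'secret\n' > notes.txt

chmod 600 notes.txt       # octal: owner read+write (6), group none (0), others none (0)
ls -l notes.txt           # the mode column starts with -rw-------
chmod u+x,g+r notes.txt   # symbolic: add execute for the owner, read for the group
ls -l notes.txt           # the mode column now starts with -rwxr-----

# Changing ownership needs root privileges, which sudo grants after asking
# for your password ("alice" and "developers" are placeholders):
# sudo chown alice:developers notes.txt
```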

Practice using these commands in a Linux environment to enhance your command line skills. With regular practice, you’ll become proficient in using the Linux command line for various tasks.

Exploring Advanced Linux Commands

Beyond the basics lie **advanced Linux commands**, which let users perform more complex tasks and operations on their system. By exploring these commands, users can streamline their workflow, automate tasks, and gain a deeper understanding of the Linux operating system.

Learning advanced Linux commands opens up a world of possibilities for users, enabling them to interact more efficiently with their system and customize their experience to suit their needs. From managing processes and files to networking and system administration, mastering these commands is essential for anyone looking to become proficient in Linux.

By familiarizing yourself with advanced Linux commands, you can take your skills to the next level and become a more efficient and knowledgeable Linux user. Whether you are a beginner looking to expand your knowledge or an experienced user seeking to enhance your expertise, delving into advanced Linux commands is a worthwhile endeavor.

Understanding Linux Editors and Utilities

Linux editors and utilities are essential tools for navigating the command line efficiently. Understanding how to use these tools can greatly enhance your productivity and streamline your workflow.

One of the most popular editors in Linux is **Vi**. Vi is a powerful text editor that allows for quick and efficient editing of files. Another commonly used editor is **Nano**, which is more user-friendly for beginners.

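When it comes to utilities, tools like **grep** and **sed** are invaluable for searching and manipulating text within files: grep prints the lines that match a pattern, and sed (the "stream editor") transforms text as it passes through, for example by substituting one string for another. **top** and **ps** are useful for monitoring system processes; top shows a continuously updating view, while ps prints a one-time snapshot.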
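
A sketch of grep and sed on a hypothetical configuration file. By default sed writes the edited text to standard output and leaves the original file alone; the in-place option -i shown in the comment is a GNU sed feature and behaves differently in BSD and macOS sed.

```bash
work=$(mktemp -d)
cd "$work" || exit 1
printf 'host=localhost\nport=8080\ndebug=true\n' > app.conf

grep '^port=' app.conf                       # prints: port=8080
sed 's/8080/9090/' app.conf                  # prints the edited text; the file is unchanged
sed 's/8080/9090/' app.conf > app.conf.new   # save the edited text to a new file
sed -n '/^debug=/p' app.conf                 # -n plus p prints only matching lines, like grep
sed '/^debug=/d' app.conf                    # d drops matching lines from the output

# GNU sed only: edit the file in place
# sed -i 's/8080/9090/' app.conf
```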

Mastering these editors and utilities will allow you to work more effectively within the Linux command line, giving you the skills needed to become a proficient Linux user.

Utilizing Linux File System Commands

Mastering **Linux file system commands** is essential for navigating and managing files efficiently. Commands like **ls**, **cd**, **mkdir**, **cp**, **mv**, and **rm** are key to interacting with the file system.

Understanding how to use these commands allows you to create, delete, copy, move, and list files and directories. Additionally, learning about **permissions** and **ownership** with commands like **chmod** and **chown** is crucial for file security and access control.

Practice using these commands in different scenarios to become proficient in managing files and directories in a Linux environment. Mastering these essential commands will boost your productivity and confidence when working in the Linux command line.

Managing Processes and Users in Linux

To effectively manage processes and users in Linux, you need to have a strong command of the Linux command line. This is where you can perform tasks such as starting, stopping, and monitoring processes, as well as creating and managing users on your system.

Use ps to view running processes and kill to send a process a signal; by default kill sends SIGTERM, which asks the process to terminate. Together they let you find and stop misbehaving programs. You can also use useradd to create user accounts and passwd to set or change their passwords.
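
The sketch below starts a harmless background sleep process, inspects it with ps, and stops it with kill. The useradd and passwd lines are comments only, since they require root privileges; alice is a placeholder user name.

```bash
sleep 300 &                     # start a long-running background process
pid=$!                          # $! holds the PID of the most recent background job
ps -p "$pid" -o pid,comm        # show just that process: its PID and command name
kill "$pid"                     # send SIGTERM, asking the process to exit
wait "$pid" 2>/dev/null || true # reap it; wait returns non-zero because it was killed
kill -0 "$pid" 2>/dev/null || echo "process $pid has exited"

# Interactive view of all processes (press q to quit):
# top

# User management needs root ("alice" is a placeholder user name):
# sudo useradd -m alice    # -m also creates the home directory
# sudo passwd alice        # set the new user's password interactively
```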

Understanding how to navigate the Linux command line will empower you to efficiently handle processes and users, making your system more secure and optimized. Mastering these skills is essential for anyone looking to become proficient in Linux administration.

Enhancing Productivity with Linux Commands

The power of Linux commands lies in their flexibility and efficiency, allowing users to automate repetitive tasks and perform complex operations with ease. By mastering the Linux Command Line, users can become more proficient in managing their systems, troubleshooting issues, and increasing their overall productivity.

With the vast array of commands available in Linux, users can customize their workflow to suit their specific needs, whether they are developers, system administrators, or casual users. Taking the time to learn and practice these commands is a worthwhile investment that can pay off in increased efficiency and effectiveness in using Linux systems.
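
To illustrate automating a repetitive task, this sketch creates three hypothetical report files, backs them all up with a single for loop, and then counts their lines with one wc command instead of checking each file by hand.

```bash
work=$(mktemp -d)
cd "$work" || exit 1
for i in 1 2 3; do
    printf 'line one\nline two\n' > "report$i.txt"   # create three sample files
done

# Back up every .txt file in one go instead of copying each by hand.
for f in *.txt; do
    cp "$f" "$f.bak"
done

# Count the lines in all reports at once; wc adds a "total" line at the end.
wc -l report*.txt
```

The same loop works unchanged for three files or three thousand, which is where command-line automation starts to save real time.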

Practicing Linux Command Line Techniques

To master the Linux command line, start by familiarizing yourself with the basic commands such as ls, cd, and mkdir. Next, practice navigating through directories, creating and editing files, and managing permissions. Utilize resources such as online tutorials, books, and practice exercises to deepen your understanding. Experiment with different commands and options to gain hands-on experience. Additionally, consider taking a Linux training course or joining a community of Linux users to enhance your skills and knowledge.

By consistently practicing and exploring various command line techniques, you can become proficient in using Linux for various tasks and projects.

Conclusion and Further Resources

In conclusion, mastering the Linux command line is a valuable skill that can greatly enhance your efficiency and productivity as a computer user. By familiarizing yourself with the command line interface, you can gain a deeper understanding of how your operating system works and have more control over your system.

For further resources, consider taking Linux training courses or workshops to deepen your knowledge and skills. Additionally, there are many online tutorials, forums, and websites dedicated to helping users learn and master the Linux command line.

Remember, practice makes perfect, so don’t be afraid to experiment and try out different commands on your own system. With dedication and persistence, you’ll soon become a proficient Linux user, able to navigate the command line with ease.

Keep learning and exploring the world of Linux, and you’ll be well on your way to becoming a skilled and knowledgeable user.