In this beginner-friendly tutorial, we will explore the fundamentals of deploying applications with Kubernetes.

Setting up a Kubernetes cluster

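To set up a Kubernetes cluster, start by installing a container runtime such as containerd or CRI-O on every node. Docker Engine can still be used, but since Kubernetes 1.24 removed the built-in dockershim it needs the cri-dockerd adapter. The runtime is what actually runs containers on your nodes.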

Next, install kubeadm, the tool that bootstraps a Kubernetes cluster, along with kubelet and kubectl. Once kubeadm is installed, initialize the cluster by running `kubeadm init` on the machine that will act as the control-plane node (formerly called the master node). This creates the Kubernetes control plane on that node.

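After initializing the cluster, join other nodes to it by running the `kubeadm join` command, with the token that `kubeadm init` prints, on each of them. Nodes report as NotReady until a pod network add-on is installed. Once the nodes are ready, the workload can be distributed across them.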
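A minimal sketch of the initialize and join steps, wrapped in shell functions so that nothing runs until you call them. The function names are hypothetical, chosen for illustration. `kubeadm init` and `kubeadm token create --print-join-command` are standard kubeadm subcommands. The sketch assumes kubeadm, kubelet, and a container runtime are already installed and that you have sudo rights on the control-plane node.

```shell
# Hypothetical helper names; define and call these on the control-plane node.

# Create the Kubernetes control plane on this machine.
init_control_plane() {
  sudo kubeadm init
}

# Print a ready-to-paste "kubeadm join ..." command that contains a fresh token.
print_join_command() {
  sudo kubeadm token create --print-join-command
}
```

Call `init_control_plane` once, then `print_join_command`, and run the printed command with sudo on each node you want to add. `kubeadm init` also prints follow-up instructions of its own, including how to copy the admin kubeconfig so that kubectl can reach the cluster.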

Deploying applications on Kubernetes

To deploy applications on Kubernetes, first ensure you have a Kubernetes cluster set up.

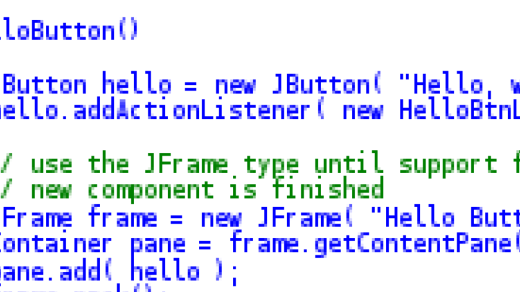

Next, create a deployment YAML file that specifies the container image, ports, and other necessary configurations.
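As an illustration, the snippet below writes a small Deployment manifest to disk. The file name `deployment.yaml`, the name and label `web`, and the `nginx:1.27` image are placeholders assumed for this sketch, not values from a real project.

```shell
# Write an example Deployment manifest to deployment.yaml.
# The file name, the "web" name/label and the image are placeholders.
cat > deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 2                 # number of identical pods to run
  selector:
    matchLabels:
      app: web                # must match the pod template labels below
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.27   # container image to run
          ports:
            - containerPort: 80
          resources:
            requests:         # baseline used for scheduling and CPU-based autoscaling
              cpu: 100m
              memory: 128Mi
EOF
```

The `selector.matchLabels` block has to match the labels in the pod template, otherwise the API server rejects the Deployment. The resource requests are optional for a first deployment, but CPU-based autoscaling measures utilization relative to them.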

Apply the deployment file using the `kubectl apply -f [file]` command to deploy the application to the cluster.

Check the status of the deployment and its pods using the `kubectl get deployments` and `kubectl get pods` commands.

Scale the deployment using `kubectl scale deployment [deployment name] --replicas=[number]` to increase or decrease the number of replicas.

Monitor the deployment using `kubectl logs [pod name]` to view logs and troubleshoot any issues that may arise.
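The apply, check, scale, and log steps above can be sketched as two small shell helpers. The function names are hypothetical, and the arguments in the usage note are placeholders. The kubectl subcommands themselves (`apply`, `rollout status`, `get`, `scale`, `logs`) are standard. The sketch assumes kubectl is already configured to talk to your cluster.

```shell
# Hypothetical helper: apply a manifest, wait for the rollout, then show status.
# Usage: deploy_and_check <manifest-file> <deployment-name>
deploy_and_check() {
  kubectl apply -f "$1" &&
  kubectl rollout status "deployment/$2" --timeout=120s &&
  kubectl get deployments "$2" &&
  kubectl get pods
}

# Hypothetical helper: change the replica count, then show recent log lines
# from one of the deployment's pods.
# Usage: scale_and_tail <deployment-name> <replicas>
scale_and_tail() {
  kubectl scale deployment "$1" --replicas="$2" &&
  kubectl logs "deployment/$1" --tail=20
}
```

For example, `deploy_and_check deployment.yaml web` followed by `scale_and_tail web 3` would deploy a manifest, wait up to two minutes for it to become ready, scale it to three replicas, and print the last 20 log lines. Each step runs only if the previous one succeeded, so a failed apply stops the sequence early.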

Monitoring and scaling Kubernetes deployments

To monitor and scale your Kubernetes deployments effectively, you can use tools such as Prometheus and the Horizontal Pod Autoscaler. Prometheus scrapes metrics from your cluster and evaluates alerting rules against thresholds you define.
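To make the idea of a threshold-based alert concrete, here is a sketch that writes a Prometheus alerting rule file. It assumes kube-state-metrics is installed and scraped by Prometheus, since that component exports the `kube_deployment_status_replicas_unavailable` metric used here. The file name, group name, alert name, and the five-minute window are illustrative choices.

```shell
# Write an example Prometheus rule file (names and thresholds are placeholders).
# The quoted 'EOF' keeps the shell from expanding $labels.
cat > deployment-alerts.yml <<'EOF'
groups:
  - name: deployment-alerts
    rules:
      - alert: DeploymentReplicasUnavailable
        # Fires when a deployment has had unavailable replicas for 5 minutes.
        expr: kube_deployment_status_replicas_unavailable > 0
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Deployment {{ $labels.deployment }} has unavailable replicas"
EOF
```

Prometheus loads files like this through the `rule_files` setting in its configuration. Delivering the resulting alerts by email or chat is handled by a separate component, Alertmanager.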

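The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pods in a deployment based on observed metrics such as CPU or memory utilization, measured against the resource requests set on the pods. It needs a metrics source such as metrics-server running in the cluster.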
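A minimal sketch of an autoscaler definition, using the `autoscaling/v2` API. It assumes a Deployment named `web` exists, that its containers declare CPU requests, and that metrics-server is installed. The name, the replica bounds, and the 70% target are placeholders.

```shell
# Write an example HorizontalPodAutoscaler manifest to hpa.yaml.
# The target name "web" and all numbers are placeholders.
cat > hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web
spec:
  scaleTargetRef:             # the workload whose replica count is managed
    apiVersion: apps/v1
    kind: Deployment
    name: web
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the pods' CPU requests
EOF
```

Apply it with `kubectl apply -f hpa.yaml` and inspect it with `kubectl get hpa`. The controller computes the desired replica count as ceil(currentReplicas × currentUtilization / targetUtilization), so 2 pods averaging 140% of their CPU request against a 70% target would be scaled to 4.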

When monitoring your deployments, pay attention to metrics like CPU and memory usage, pod health, and any alerts triggered by Prometheus.

To scale your deployments, you can manually adjust the number of replicas in a deployment or set up Horizontal Pod Autoscaler to automatically handle scaling based on predefined metrics.

Regularly monitoring and scaling your Kubernetes deployments will help ensure optimal performance and resource utilization.